Since May, the Article Feedback Tool has been available on 100,000 English Wikipedia articles (see blog post). We have now kicked off full deployment to the English Wikipedia at a rate of about 370,000 articles per day and will continue at this rate until deployment is complete.

We wanted to take a moment to recap what we’ve learned so far, what lies ahead, and how we can work with the community to improve this feature. Features like Article Feedback can always be improved, so we will continue to experiment, measure, and iterate based on user and community feedback, testing, and analysis of how the feature is being used.

Rating data from the tool is available for your analysis — please dig in and let us know what you find. Toolserver developers can also access the rating data (minus personal information) in real-time to develop new dashboards and views of the data.

What We’ve Learned So Far

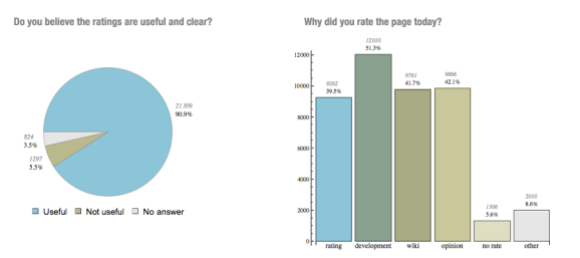

Readers like to provide feedback. The survey we’re currently running shows that over 90% of users find the ratings useful. Many of these raters see the tool as a way to participate in article development: when asked why they rated an article, over half reported wanting to “positively affect the development of the page.”

Users of the feedback tool also left some enthusiastic comments (as well as some critical ones) about the tool. For example:

The option to rate a page should be available on every page, all the time, once per page per user per day.

As a high school librarian, I want my students to assess the sources of information they use. This feature forces them to consider the reliability of Wiki articles. Glad you have it.

Ratings seem like an interesting idea, I feel like the metrics used to determine the overall value of the page are viable, and I’ll be interested to see how the feature fares when it’s rolled out and has some miles under its belt.

The vast majority of raters were previously only readers of Wikipedia. Of the registered users who rated an article, 66% had no prior editing activity. For these registered users, rating an article is their first participatory activity on Wikipedia. These initial results show that we are starting to engage these users beyond just passive reading, and they seem to like it.

The feature brings in editors. One of the main Strategic Goals for the upcoming year is to increase the number of active editors contributing to WMF projects. The initial data from the Article Feedback tool suggests that reader feedback could become a meaningful point of entry for future editors.

Once users have successfully submitted a rating, a randomly selected subset of them is shown an invitation to edit the page. Of the users who were invited to edit, 17% attempted to edit the page, and 15% of those ended up successfully completing an edit. These results strongly suggest that a feedback tool could successfully convert passive readers into active contributors to Wikipedia. A rich text editor could make this path to editing even more promising.
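To make the two percentages above concrete, here is a minimal arithmetic sketch. It assumes that “15% of those” refers to the users who attempted an edit rather than to all invited users, and the cohort of 10,000 invited users is a hypothetical figure chosen only for illustration. Neither assumption comes from the AFT data itself.

```python
# Rates reported in this post.
ATTEMPT_RATE = 0.17     # share of invited users who attempted an edit
COMPLETION_RATE = 0.15  # ASSUMPTION: share of attempters who completed an edit


def funnel(invited: int) -> dict:
    """Walk a cohort of invited users through the two funnel steps."""
    attempted = invited * ATTEMPT_RATE
    completed = attempted * COMPLETION_RATE
    return {
        "invited": invited,
        "attempted": attempted,
        "completed": completed,
        "overall_rate": completed / invited,
    }


# Hypothetical cohort size, not a figure from the AFT data.
result = funnel(10_000)
print(f"attempted: {result['attempted']:.0f}")
print(f"completed: {result['completed']:.0f}")
print(f"overall:   {result['overall_rate']:.2%}")
```

Under this reading, roughly 2.55% of invited users would end up completing an edit, or about 255 out of every 10,000 invitations.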

While these initial results are certainly encouraging, we need to assess whether these editors are, in fact, improving Wikipedia. We need to measure their level of activity, the quality of their contributions, their longevity, and other characteristics.

Ratings are a useful measure of some dimensions of quality. In its current form, the Article Feedback Tool appears to provide useful feedback on some dimensions of quality, while the usefulness of the feedback on other dimensions is still an open research question. “Complete” and “Trustworthy” (formerly “Well-Sourced”) appear to be dimensions where readers can provide a reasonable level of assessment. Research shows that ratings along these dimensions are correlated with article length and number of citations, respectively. We need to determine whether the ratings in “Objective” and “Well-Written” meaningfully predict quality in those categories. We released public dumps of AFT data and would love to hear about new approaches to measuring how well ratings reflect article quality.
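For readers who want to try this kind of analysis on the public dumps, the following is a rough sketch of a correlation check between a rating dimension and article length. The `pearson` helper and both lists are hypothetical: the values are placeholders invented for illustration, not AFT data, and this is not necessarily the method used in the research mentioned above.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# PLACEHOLDER values, invented for illustration; replace with real dump data.
mean_complete_rating = [2.1, 3.0, 3.4, 4.2, 4.6]               # one value per article
article_length_bytes = [1_200, 8_500, 15_000, 42_000, 90_000]  # same articles

r = pearson(mean_complete_rating, article_length_bytes)
print(f"Pearson r = {r:.2f}")
```

Because article length tends to be heavily skewed, a rank-based measure such as Spearman's correlation, or a log transform of length, may be a better fit for real data.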

We received feedback from community members on how to improve the feature. We’ve received a fair amount of feedback from the community on the usefulness of AFT, mainly through IRC Office Hours and on the AFT discussion page. There have been many suggestions on how to make the feedback tool more valuable for the community. For example, the idea of having a “Suggestions for Improvement”-type comment box has been raised several times. Such a box would enable readers to provide concrete feedback directly to the editing community on how to improve an article. We plan to develop some kind of commenting system in the near future.

AFT could help surface problematic articles in real time, as well as articles that may qualify for increased visibility. We’ve started experimenting with a dashboard for surfacing both highly rated and poorly rated articles. Ultimately, the dashboard could help identify articles that need attention (e.g., articles that have been recently vandalized) as well as articles that might be considered for increased visibility (e.g., candidates for Featured Articles). We will continue to experiment with algorithms that help surface trends in articles that may be useful for the editing community.

Next Steps

Over the coming weeks, we will continue to roll out the Article Feedback Tool on the English Wikipedia. Once this rollout is complete, we will start planning the next version of the tool. For those interested in following the discussion, we will be documenting progress on the Article Feedback Project Page. We would love to get your feedback (pun intended!) on how the feature is being used, what’s working, and what might be changed. We also encourage folks to dig into the data. Once the feature is fully deployed, there will be mountains of data to sift through and analyze, which will be a boon to researchers and developers alike.

We’d especially like to encourage members of the community to get involved in the further development of the feature. If you’re interested in helping (e.g., with design input, data analysis and interpretation, or bug-squashing), please drop a note on the project talk page.

Howie Fung, Senior Product Manager

Dario Taraborelli, Senior Research Analyst

Erik Moeller, VP Engineering and Product Development
