Vol: 1 • Issue: 4 • October 2011

WikiSym; predicting editor survival; drug information found lacking; RfAs and trust; Wikipedia’s search engine ranking justified

With contributions by: Boghog, Jodi.a.schneider, Drdee, DarTar, Phoebe and Tbayer

Wiki research beyond the English Wikipedia at WikiSym

WikiSym 2011, the “7th international symposium on wikis and open collaboration”, took place from October 3-5 at the Microsoft Research Campus in Silicon Valley (Mountain View, California). Although the conference’s scope has broadened to include the study of open online collaborations that are not wiki-based, Wikipedia-related research still took up a large part of the schedule. Several of the conference papers have already been reviewed in the September and August issues of this research overview, and the rest of the proceedings have since become available online.

The workshop “WikiLit: Collecting the Wiki and Wikipedia Literature”,[1] led by Phoebe Ayers and Reid Priedhorsky, explored the daunting task of collecting the scholarly literature pertaining to Wikipedia and wikis generally. Research about wikis can be difficult to find, since papers are published in many fields (from sociology to computer science) and in many formats, from published articles to on-wiki community documents. There have been several attempts over the years to collect the wiki and Wikipedia literature, including on Wikipedia itself, but all such projects have struggled to keep up with the sheer volume of research that is published every year. While the workshop did not reach consensus on which platform to use to build a sustainable system, there was agreement that this is an important topic for the research and practitioner community, and the group developed a list of requirements that such a system should meet. The workshop followed and extended discussions on this topic held earlier this year on the wiki-research-l mailing list.

In a panel titled “Apples to Oranges?: Comparing across studies of open collaboration/peer production”,[2] six US-based scholars reviewed the state of this field of research. Among the takeaways were a call to study failed collaboration projects more often instead of focusing research on successful “anomalies” like Wikipedia, and – especially in the case of Wikipedia – to broaden research to non-English projects.

Another workshop, titled “Lessons from the classroom: successful techniques for teaching wikis using Wikipedia”,[3] was a retrospective on the Wikimedia Foundation’s Public Policy Initiative.

Among the conference papers not mentioned before in this newsletter are:

- “Mentoring in Wikipedia: a clash of cultures”,[4] a paper which “draw[s] insights from the offline mentoring literature to analyze mentoring practices in Wikipedia and how they influence editor behaviors. Our quantitative analysis of the Adopt-a-user program shows mixed success of the program”.

- “Vandalism Detection in Wikipedia: A High-Performing, Feature-Rich Model and its Reduction”[5] – arguing that on Wikipedia “human vigilance is not enough to combat vandalism, and tools that detect possible vandalism and poor-quality contributions become a necessity”, the authors present a vandalism classifier constructed using machine learning techniques.

Wikipedia-related posters included:

- “A scourge to the pillar of neutrality: a WikiProject fighting systemic bias”,[6] presenting preliminary findings from an ongoing survey and interviews among members of the WikiProject Countering systemic bias.

- Another poster presentation planned to analyze the contributions of the members of this WikiProject to see what kind of systemic bias they might exhibit themselves (“Places on the map and in the cloud: representations of locality and geography in Wikipedia”[7]).

- “Participation in Wikipedia’s article deletion processes”[8] found that “the deletion process is heavily frequented by a relatively small number of longstanding users” and that “the vast majority of [speedily] deleted articles are not spam, vandalism, or ‘patent nonsense’, but rather articles which could be considered encyclopedic, but do not fit the project’s standards”.

- “Exploring underproduction in Wikipedia”[9] examined “two key circumstances in which collective production can fail to respond to social need: when goods fail to attain high quality despite (1) high demand or (2) explicit designation by producers as highly important”.

Quality of drug information in Wikipedia

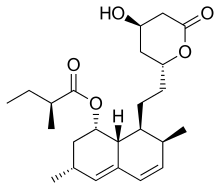

A study of five widely prescribed statins, entitled “Accuracy and completeness of drug information in Wikipedia: an assessment”[10] and published in this month’s issue of the Journal of the Medical Library Association, found that while the Wikipedia articles on these drugs are generally accurate, they are incomplete and inconsistent. The study’s authors conclude:

| “ | Because the entries on the five most commonly prescribed statins lacked important information, the authors recommend that consumers should seek other sources and not rely solely on Wikipedia. | ” |

The study’s main criticism is that most of the articles lacked sufficient information on adverse effects, contraindications, and drug interactions, and that this lack of information might harm the consumer. These criticisms echo those of two similar earlier studies reported in the Signpost: “Pharmacological study criticizes reliability of Wikipedia articles about the top 20 drugs” and “Wikipedia drug coverage compared to Medscape, found wanting”. However, the authors did note the benefit of Wikipedia’s hypertext links to additional information, which most other web sources on drug information lack, and also noted that all the Wikipedia articles contained references to peer-reviewed journals and other reliable sources. Overall, the latest study is therefore somewhat more positive than the earlier two.

Predicting editor survival: The winners of the Wikipedia Participation Challenge

The Wikimedia Foundation announced the winners of the Wikipedia Participation Challenge. The data competition, organized in partnership with Kaggle and the 2011 IEEE International Conference on Data Mining, asked data scientists to use Wikipedia editor data to develop an algorithm that predicts the number of future edits, and in particular one that correctly predicts who will stop editing and who will continue to edit (see the call for submissions). The response was overwhelming, with 96 participating teams, comprising a total of 193 people, who jointly submitted 1029 entries (listed in the competition’s leaderboard).

The brothers Ben and Fridolin Roth (team prognoZit) developed the winning algorithm, a linear regression model built with Python and GNU Octave. The algorithm used 13 features (2 based on reverts and 11 based on past editing behavior) to predict future editing activity. Both the source code and a description of the algorithm are available. Unfortunately, because the model relied on patterns in the training dataset that would not be present in the actual data, its ongoing usefulness is severely limited.
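To make the idea of features “based on reverts” and “based on past editing behavior” more concrete, here is a minimal Python sketch of how such counts might be derived from one editor’s history. It is not the winning team’s code: the window lengths, the feature names and the `editor_features` helper are all assumptions made for illustration, and the actual 13 features are documented only in the team’s own description.

```python
from datetime import datetime, timedelta

# Hypothetical window lengths (in days); the windows used by the
# winning entry are not stated in this newsletter.
WINDOWS_DAYS = (30, 90, 365)


def editor_features(edits, cutoff):
    """Build a small feature dict for one editor.

    edits  -- list of (timestamp, was_reverted) tuples
    cutoff -- datetime; only edits strictly before it are used
    """
    past = [(ts, reverted) for ts, reverted in edits if ts < cutoff]
    features = {}
    # Activity features: number of edits in each trailing window.
    for days in WINDOWS_DAYS:
        start = cutoff - timedelta(days=days)
        features[f"edits_last_{days}d"] = sum(1 for ts, _ in past if ts >= start)
    # Revert feature: how many of the editor's past edits were reverted.
    features["reverted_edits"] = sum(1 for _, reverted in past if reverted)
    features["total_edits"] = len(past)
    return features


# Toy history, invented for this example.
cutoff = datetime(2010, 9, 1)
sample = [
    (datetime(2009, 6, 1), False),
    (datetime(2010, 7, 15), True),
    (datetime(2010, 8, 20), False),
    (datetime(2010, 9, 5), False),  # after the cutoff, so ignored
]
print(editor_features(sample, cutoff))
```

A regression model or a random forest would then be fitted on one such feature vector per editor, with the number of edits after the cutoff as the target.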

Second place went to Keith Herring. Submitting only 3 entries, he developed a highly accurate random forest model that uses a total of 206 features. His model shows that a randomly selected Wikipedia editor who has been active in the past year has approximately an 85 percent probability of becoming inactive (no new edits) in the following 5 months. The most informative features captured both the timing and the volume of an editor’s activity.

The challenge also announced two Honourable Mentions for participants who used only open source software. The first Honourable Mention went to Dell Zhang (team zeditor), who used a machine learning technique called gradient boosting. His model mainly uses recent past editor activity. The second Honourable Mention went to Roopesh Ranjan and Kalpit Desai (team Aardvarks). Using Python and R, they too developed a random forest model. Their model used 113 features, mainly based on the number of reverts and past editor activity (see its full description).

All the documentation and source code has been made available on the main entry page for the WikiChallenge.

What it takes to become an admin: Insights from the Polish Wikipedia

A team of researchers based at the Polish-Japanese Institute of Information Technology (PJIIT) published a study, presented at SocInfo 2011, of Requests for Adminship (RfA) discussions in the Polish Wikipedia.[11] The paper presents a number of statistics about adminship in the Polish Wikipedia since the RfA procedure was formalized in 2005, including the rejection rate of candidates across different rounds, the number of candidates and votes over the years, and the distribution of tenure and experience of candidates for adminship. The results indicate that it was far harder to obtain admin status in 2010 than in previous years, and that the tenure required of a successful RfA candidate has risen sharply: “the mean number of days since registration to receiving adminship is nearly five times larger than it was five years before”.

The remainder of the paper studies RfA discussions by comparing the social network of participants, based on their endorsement (vote-for) or rejection (vote-against) of a given candidate, with an implicit social network derived from three different types of relations between contributors (trust, criticism and acquaintance). The goal is to measure to what extent these different kinds of relations can predict voting behavior in the context of RfA discussions. The findings suggest that “trust” and “acquaintance” (measured respectively as the number of edits by an editor in the vicinity of those by the other editor and as the number of discussions between two contributors) are significantly higher in votes-for than in votes-against. Conversely, “criticism” (measured as the number of edits made by one author and reverted by another editor) is significantly higher in votes-against than in votes-for.
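As a rough illustration of the “criticism” comparison described above, the following Python sketch counts reverts between each voter and candidate and averages them separately for votes-for and votes-against. It is not the paper’s method: the data layout, the function names and the choice to count reverts in both directions are assumptions, and it reports plain means without the significance testing the study relies on.

```python
from collections import Counter
from statistics import mean


def criticism_counts(reverts):
    """Count reverts per ordered pair.

    reverts -- iterable of (reverting_editor, reverted_author) pairs
    """
    return Counter(reverts)


def mean_criticism_by_vote(votes, reverts):
    """Average criticism score for votes-for and votes-against.

    votes -- iterable of (voter, candidate, vote), vote in {"for", "against"}
    """
    counts = criticism_counts(reverts)
    by_vote = {"for": [], "against": []}
    for voter, candidate, vote in votes:
        # Assumption: reverts in either direction between the pair count.
        score = counts[(voter, candidate)] + counts[(candidate, voter)]
        by_vote[vote].append(score)
    return {vote: mean(scores) for vote, scores in by_vote.items() if scores}


# Toy data, invented for this example.
reverts = [("A", "C"), ("A", "C"), ("C", "A"), ("B", "C")]
votes = [("A", "C", "against"), ("B", "C", "for"), ("D", "C", "for")]
print(mean_criticism_by_vote(votes, reverts))
```

In this toy data the vote-against pair has more mutual reverts than the vote-for pairs, which is the direction of the effect the study reports; the “trust” and “acquaintance” measures could be compared in the same way once they are computed per pair.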

This study complements research on the influence of social ties on adminship discussions reviewed in the previous edition of the research newsletter.

High search engine rankings of Wikipedia articles found to be justified by quality

An article titled “Ranking of Wikipedia articles in search engines revisited: Fair ranking for reasonable quality?”, by two professors of information research at the Hamburg University of Applied Sciences, addresses “the fiercely discussed question of whether the ranking of Wikipedia articles in search engines is justified by the quality of the articles”. (The article appeared earlier this year in the Journal of the American Society for Information Science and Technology and is now available as open access, also in the form of a recent arXiv preprint.[12]) The authors recall an earlier paper coauthored by one of them[13] that had found Wikipedia to be “by far the most popular” host in search engine results pages (in the US): in “1000 queries, Yahoo showed the most Wikipedia results within the top 10 lists (446), followed by MSN/Live (387), Google (328), and Ask.com (255)”. They then set out to investigate “whether this heavy placement is justified from the user’s perspective”. First, they re-purposed the results of a 2008 paper by the first author,[14] in which students had been asked to judge the relevance of search engine results for 40 queries collected in 2007, restricting them to the search results that consisted of Wikipedia articles, all of them from the German version. They found that “Wikipedia results are judged much better than the average results at the same ranking position” by the jurors, and that

| “ | The data indicates that contrary to the assumption that Wikipedia articles show up too often in the search engines’ results, the search engines could even think of improving their results through providing more Wikipedia results in the top positions. | ” |

In order to conduct a more thorough investigation (the 2008 assessments having focused only on the criterion of relevance), the present paper sets out to develop a set of quality criteria for the evaluation of Wikipedia articles by human jurors. It first gives an overview of existing literature about the information quality of Wikipedia, and of encyclopedias in general, identifying four main criteria that several pre-2002 works about the quality of reference works agreed on. Interestingly, “accuracy” was not among them, an omission which the authors explain by the difficulty of fact-checking an entire encyclopedia. From this, the authors derive a set of 14 evaluation criteria, incorporating both the general criteria from the literature about reference works and internal Wikipedia criteria such as the status of being a featured/good article, the verifiability of the content and the absence of original research. These were then applied by the jurors (two final-year undergraduate students with experience in similar coding tasks) to 43 German Wikipedia articles that had appeared in the 2007 queries, in their state at that time. While “the evaluated Wikipedia articles achieve a good score overall”, there were “noticeable differences in quality among the examples in the sample” (the paper contains interesting discussions of several strengths and weaknesses according to the criteria set, e.g. the conjecture that the low score on “descriptive, inspiring/interesting” writing could be attributed to “the German academic style. A random comparison with the English version of individual articles seems to support this interpretation”).

The authors conclude:

| “ | In general, our study could confirm that the ranking of Wikipedia articles in search engines is justified by a satisfactory overall quality of the articles. … In answer to research question 4b, 4c (‘Is the ranking appropriate? Are good entries ranked high enough?’), we can say that the rankings in search engines are at least appropriate. | ” |

Both the search engine ranking data and the evaluated Wikipedia article revisions are somewhat dated, referring to January 2007 (the authors themselves note that it “could well be that in the meantime search engines reacted to that fact [the potential of improving results by ranking Wikipedia higher] and further boosted Wikipedia results”, and also that regarding the German Wikipedia, the search engine results did not take into account possible effects of the introduction of stable versions in 2008).

Attempts to predict the outcome of AfD discussions from an article’s edit history

A master’s thesis defended by Ashish Kumar Ashok, a student in computing at Kansas State University, describes machine learning methods to determine how the final outcome of an Articles for Deletion (AfD) discussion is affected by the editing history of the article.[15] The thesis considers features such as the structure of the graph of revisions of an article (based on text changed, added or removed), the number of edits of the article, the number of disjoint edits (according to some contiguity definition), as well as properties of the corresponding AfD, such as the number of !votes and the total length of words used by AfD participants who expressed a preference to keep, merge or delete the article. Different types of classifiers based on the above features are applied to a small sample of 64 AfD discussions from the 1 August 2011 deletion log. The results of the analysis indicate that the performance of the classifiers does not significantly improve when any of the above features is considered in addition to the sheer number of !votes, which limits the scope and applicability of the methods explored in this work for predicting the outcome of AfD discussions. The author suggests that datasets larger than the sample considered in this study should be obtained in order to assess the validity of these methods.

In brief

- Why did Wikipedia succeed while others failed?: In a presentation on October 11 at the Berkman Center for Internet and Society (“Almost Wikipedia: What Eight Collaborative Encyclopedia Projects Reveal About Mechanisms of Collective Action”, with video), MIT researcher and Wikimedia Foundation advisory board member Benjamin Mako Hill presented preliminary results of his research comparing Wikipedia and seven other Internet encyclopedia projects or proposals that did not take off, based on interviews with the projects’ founders as well as examinations of their archives. The event was summarised for the Nieman Journalism Lab (reprinted in Business Insider), and in the Signpost: “The little online encyclopaedia that could”. Hill later gave a shorter (ca. 12 min) talk about the same topic at the “Digital commons” forum (see below): video, slides.

- “Digital Commons” conference: On October 29-30, the “Building Digital Commons” conference took place in Barcelona, organized by Catalan Wikimedians and Wikimedia Research Committee member Mayo Fuster Morell, and supported by the Wikimedia Foundation. The program featured several presentations about Wikipedia research; further online documentation is expected to become available later.

- Placement of categories examined: A paper from two computer science researchers based at the Katholieke Universiteit Leuven examines the order in which categories are placed on a Wikipedia article and reports on connections between a category’s position in this list and “its persistence within the article, age, popularity, size, and descriptiveness”.[16] The order in which categories are added is not determined by any explicit rule. However, the research found that older, more persistent and more exclusive categories are consistently placed in lower positions. Categories appearing at lower positions also tend to do so across all the articles they contain, and they include articles that are more similar to each other in terms of category overlap.

- Visualizing semantic data: A team from the UCSB Department of Computer Science recently presented[17] WiGiPedia, a tool visualizing rich semantic data about Wikipedia articles, designed to “inform the user of interesting contextual information pertaining to the current article, and to provide a simple way to introduce and/or repair semantic relations between wiki articles”. The tool builds on structured data represented via templates, categories and infoboxes and queried via DBpedia. By supporting collaborative editing of rich semantic data and one-click semantic updates of Wikipedia articles, the tool aims to bridge the gap between Wikipedia and DBpedia. The source code of the tool doesn’t appear to be publicly released.

- Wikipedia literature review: Owen S. Martin posted to arXiv a 28-page Wikipedia literature review towards his Ph.D. in statistics.[18] About half the paper gives an overview of Wikipedia’s database structure; the remainder reviews about 30 recent papers from the perspective of assessing their quality, trust, semantic extraction, governance, economic implications and epistemological implications.

- Vandalism detection contest: An “Overview of the 2nd International Competition on Wikipedia Vandalism Detection” has been published.[19]

- Matching Wikipedia articles to GeoNames entries: A four-page paper by two researchers from Hokkaido University[20] explored the problem of “merging Wikipedia’s Geo-entities and GeoNames” to form a larger geographical database. This is already being done by the YAGO (Yet Another Great Ontology) database, but the paper uses additional data beyond the article name, such as categories and disambiguation pages on Wikipedia, in order to identify further matching pairs missed in YAGO (and in the process found several errors in GeoNames).

- Attempt to examine evolution of key activities in Wikipedia: A paper titled “Governing Complex Social Production in the Internet: The Emergence of a Collective Capability in Wikipedia”[21] (presented last month at the “Decade in Internet Time” symposium at the Oxford Internet Institute) undertakes “an exploratory theoretical analysis to clarify the structure and mechanisms driving the endogenous change of [Wikipedia]”, using the framework of capability theory to construct six hypotheses such as “the membership in group(s) of contributors that take up governance tasks varies less than in those revolving on content production”. These are then tested empirically by applying a clustering algorithm to monthly snapshots of the English Wikipedia (until 2009) “to identify distinct groupings of contributors at each month”. However, the clustering algorithm leaves out a group of users “that covers all the observed domains of activity” and “despite its relatively small share of overall contributor population … provides the majority of the work”, which leads the authors to dub it “the core editors of Wikipedia”.

References

- Ayers, Phoebe, and Reid Priedhorsky (2011). WikiLit: Collecting the wiki and Wikipedia literature. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 229. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Antin, Judd, Ed H. Chi, James Howison, Sharoda Paul, Aaron Shaw, and Jude Yew (2011). Apples to oranges? Comparing across studies of open collaboration/peer production. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 227. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Schulenburg, Frank, LiAnna Davis, and Max Klein (2011). Lessons from the classroom. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 231. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Musicant, David R., Yuqing Ren, James A. Johnson, and John Riedl (2011). Mentoring in Wikipedia: a clash of cultures. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 173. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Javanmardi, Sara, David W. McDonald, and Cristina V. Lopes (2011). Vandalism detection in Wikipedia. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 82. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Livingstone, Randall M. (2011). A scourge to the pillar of neutrality. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 209. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Livingstone, Randall M. (2011). Places on the map and in the cloud: representations of locality and geography in Wikipedia. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 211. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Geiger, R. Stuart, and Heather Ford (2011). Participation in Wikipedia’s article deletion processes. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 201. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Gorbatai, Andreea D. (2011). Exploring underproduction in Wikipedia. In: Proceedings of the 7th International Symposium on Wikis and Open Collaboration – WikiSym ’11, 205. New York, New York, USA: ACM Press, 2011. DOI • PDF

- Kupferberg, Natalie, and Bridget McCrate Protus (2011). Accuracy and completeness of drug information in Wikipedia: an assessment. Journal of the Medical Library Association 99(4): 310-3. DOI • HTML

- Turek, Piotr, Justyna Spychała, Adam Wierzbicki, and Piotr Gackowski (2011). Social Mechanism of Granting Trust Basing on Polish Wikipedia Requests for Adminship. In: Social Informatics 2011. Lecture Notes in Computer Science, 6984: 212-225. DOI

- Lewandowski, Dirk, and Ulrike Spree (2011). Ranking of Wikipedia articles in search engines revisited: Fair ranking for reasonable quality? Journal of the American Society for Information Science and Technology 62(1): 117-132. DOI • arxiv.org PDF

- Höchstötter, Nadine, and Dirk Lewandowski (2009). What users see – Structures in search engine results pages. Information Sciences 179(12): 1796-1812. DOI • PDF

- Lewandowski, Dirk (2008). The retrieval effectiveness of Web search engines: Considering results descriptions. Journal of Documentation 64(6): 915-937. PDF

- Ashok, Ashish Kumar (2011). Predictive data mining in a collaborative editing system: the Wikipedia articles for deletion process. HTML

- Gyllstrom, Karl, and Marie-Francine Moens (2011). Examining the “Leftness” Property of Wikipedia Categories. In: CIKM ’11. PDF

- Bostandjiev, Svetlin, John O’Donovan, Brynjar Gretarsson, Christopher Hall, and Tobias Hollerer (2011). WiGiPedia: Visual Editing of Semantic Data in Wikipedia. In: Workshop on Visual Interfaces to the Social and Semantic Web (VISSW2011). PDF

- Martin, Owen S. (2011). A Wikipedia Literature Review. arXiv, October 17, 2011. PDF

- Potthast, Martin, and Teresa Holfeld (2011). Overview of the 2nd International Competition on Wikipedia Vandalism Detection. In: PAN 2011. PDF

- Liu, Yiqi, and Masaharu Yoshioka (2011). Construction of large geographical database by merging Wikipedia’s Geo-entities and GeoNames. PDF

- Aaltonen, Aleksi, and Giovan Francesco Lanzara (2011). Governing Complex Social Production in the Internet: The Emergence of a Collective Capability in Wikipedia. In: Decade in Internet Time symposium. HTML

Wikimedia Research Newsletter

This newsletter is brought to you by the Wikimedia Research Committee and The Signpost