For more than a decade, we have seen how different communities all over the world dedicate efforts to Wikimedia programs: a unique expression of organized activities within the Wikimedia movement that combine both global and local factors. They share common goals, but no two programs are identical. These diverse projects ultimately seek to ensure that anybody can contribute to help build the sum of all knowledge.

As more and more of these programs are introduced and expanded across communities, we often hear the same concerns. People are torn between a desire to do more for the movement and a wish to avoid the stress that can come with such goals. How can we learn to support the movement, and make an impact, without burning out?

This is where Learning and Evaluation efforts can build Wikimedia’s capacity as a movement. On May 13 and 14, Community Engagement teams at the Wikimedia Foundation collaborated with the Partnerships and Development team (ZEN) at Wikimedia Deutschland to host a series of workshops providing support to program leaders attending Wikimedia Conference 2015. Over the two days, 31 program leaders gathered to share thoughts, practices, and lessons learned that can help them plan and execute their programs more effectively.

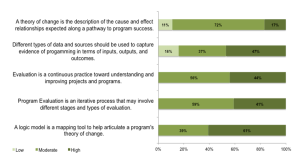

During our time together, we discussed key concepts such as logic models and the differences between inputs, outputs, and outcomes. More than 80 percent of participants left feeling they could apply these to the operation of their programs.[1] Most also left with a moderate to high level of understanding of core workshop concepts overall.

At the end of the workshop, almost nine in ten participants felt at least somewhat, or even mostly (67 percent), prepared to take back workshop concepts and tools to implement in their learning and evaluation next steps.[2] More than three-quarters said they would work to develop tools to monitor their inputs and outputs, while 63 percent will work to articulate their specific program’s impact goals and theory of change. Over half planned to develop their own logic models as a result of the workshops.[3]

Here are a few highlights of the tools and resources shared in Berlin to help you design, track and measure Wikimedia programs.

Logic models

Program leaders across the Wikimedia movement face multiple challenges. They need to plan their programs thoroughly, think through their potential impact in a well-structured way, and tell a clear story about their achievements. To start the pre-conference’s evaluation journey, Wikimedia Deutschland presented logic models as a particularly helpful tool for planning and evaluating local program implementations.

Logic models may sometimes appear to be a rather abstract or theoretical framework, but there’s no need to fear them. They are at the very heart of our mission, and link what our activities do and create, how program participants are affected, and the changes these programs make in the long run. Thinking along this logical path makes it a lot easier to set up productive programs and to better navigate the evaluation jungle.

These models don’t have to be complicated, as demonstrated by the “staircase to impact” concept: a step-by-step template, beginning with investments, guided by expected outcomes, which finally results in an impact for the Wikimedia movement or the broader society. Check out the logic models created by participants here.

Learning from failures

The Foundation’s new director of Learning & Evaluation, Rosemary Rein, reminded us of the importance of balance with a tai chi break for workshop participants. This reflects Wikimedia program leaders’ need to find a balance between using evaluation structures and tools to replicate successes and maximize volunteer and staff resources on the one hand, and creating a safe space to experiment with new initiatives on the other. This year’s Wikimedia Conference included such a “safe space” in the form of a “Fail Fest”, where attendees could learn from one another’s failures.

Data and tools

During the workshop, we also reviewed different data sources that can help program leaders better evaluate their efforts. Aside from user-related data on Wikimedia projects, there are many places program leaders can go in search of the materials they need to gather evidence of their program’s story – emails, meeting minutes, and talk pages, among others. This is especially relevant for program leaders who want to build their own indicators of progress or success. It is important to be creative, and to understand what matters in a local context, to put forward an appropriate story.

In order to encourage the use of data in reporting, the workshop also had a space called the “tools café”. There, we shared tools in three different categories: education, editor and content performance, and GLAM initiatives. Many participants were excited to learn about the education extension and how it can document the work of a variety of education programs. Other tools shared included Wikimetrics, GLAMorous and CatScan. While all of these tools are useful in gathering reliable data, one tool alone won’t allow much scope to tell a good story. Having a variety of different tools enables program leaders to find those that work best for their local programs, and to combine them with other useful resources to compile an engaging story.

Next steps

We asked participants in Berlin to share what their main takeaways were, and what they plan to do with the shared resources. It was rewarding to hear so many people excited to go back to their local communities and make use of these tools and resources.

We now face the challenge of supporting real use in local communities. As we continue to refine and develop tools and resources to support Wikimedia programs, leaders’ wisdom and experience are critical success factors. We invite you to reach out to the Learning and Evaluation team through our portal on Meta: share your knowledge and discoveries through learning patterns, and give us feedback on a growing library of tools designed to support your work. Most importantly, don’t forget to have fun telling your program’s story!

María Cruz, Community Liaison, Learning and Evaluation, Wikimedia Foundation

Christof Pins, Monitoring and evaluation, Wikimedia Deutschland

[1] Percentage is out of 19 responses to the question on the exit survey. Participants continued to struggle somewhat with the more theoretical concept of a theory of change, for which 47% reported only a basic understanding and 41% an applied understanding.

[2] Percentage is out of 18 responses to the question on the exit survey.

[3] Percentage is out of 19 responses to the question on the exit survey.
