The competition is like a celebration, a festival, because all of a sudden there are a lot of new pages about the topic. Photo by Alejandro Escamilla, freely licensed under CC0 1.0.

On July 9, 2004, the winner of the first Wikipedia writing contest was awarded a bottle of champagne. During this “Essay Contest” on the Dutch Wikipedia, participants were challenged to write the best article on any topic, and a three-member jury chose the winning article. Since then, writing contests have sprouted throughout the Wikimedia projects, with participants from all over the world creating and improving articles in many languages. Here are just a few examples of successful contests:

- The 2014 Producer Prize contest on Arabic Wikipedia had participants set personal goals to create 1,802 articles in 6 months

- The 2013 Iberoamerican Women contest on Spanish Wikipedia aimed to increase coverage of Iberoamerican women, with participants working on 915 articles in 6 months

- The 2013 WikiBio contest on Ukrainian Wikipedia asked participants to work in groups and create 47 quality articles in biology in one month

Many communities have adopted this type of online event, with different goals and varying degrees of success, so we asked three basic questions about how we can support this organic growth:

- How can we capture and share the knowledge about writing contests?

- What are best practices for contests so they can be more easily replicated and repeated with success?

- How can we better understand their impacts on the community or on wiki projects?

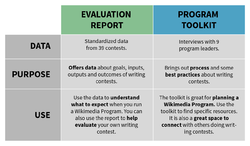

In the spirit of these questions, the Learning and Evaluation team at the Wikimedia Foundation worked with 17 community members to capture data about 39 writing contests. We also interviewed a variety of program leaders who had hosted successful contests. Today we are happy to announce the publication of a new writing contest evaluation report and the launch of a program toolkit.

The Writing Contest Evaluation Report

The Writing Contest Report shares data with the aim of growing community knowledge about contests. Even with a small sample, the report provides a descriptive analysis that program leaders can use to plan contests. The average contest had 4 participants, 64,712 characters added, and 25 articles created or improved per week. As a tool for growing the community, writing contests appear to have high retention rates compared to some measures of editor retention. Using our standard user metric, about 18% of new users and 83% of existing users made at least one edit three months after the start date of the contests. Contest designs also seem to vary widely; elements that influence the design include contest length, the proportion of new users, and goals for editing articles.

| | Participants | Characters/bytes added | Articles created or improved |
|---|---|---|---|
| Average contest (per week) | 4 | 64,712 | 25 |
| All 39 contests | 745 | 23 million | 15,000 |

Report snapshot video, by Edward Galvez, freely licensed under CC BY-SA 4.0.

Our report is a great resource for planning future writing contests. The range of data on inputs, outputs, and outcomes can tell you what is generally a high or low number for each measure. All of the data that went into the report is included in tables in the appendix. Look for contests similar to the one you have in mind to help plan your own writing competition. If you have never organized an event like this on a wiki and would like to learn what to expect from a contest, this is the place to start. Likewise, if you have been organizing writing competitions for a while, you can learn how this program develops in other contexts and connect with the program leaders who coordinate them.

More data and more measures are key to developing a deeper understanding about writing contests and their outcomes. We encourage program leaders to continue using tools and resources to capture data about their programs.

The Writing Contest Program Toolkit

The writing contest toolkit is the second in a series of program guides on how to implement more effective Wikimedia programs. It connects data from the program reports and experience from 9 contest coordinators to highlight key success strategies. Interviews with contest coordinators revealed fascinating information about how communities use contests to identify and work toward shared goals as well as strategies they use to engage new users and reinforce community values around collaboration and trust.

The newest toolkit includes a few design improvements: quick-start contest planning templates featured on the start page, a question forum, and a gallery of contest experts, among others. As this is the only space across the wikis where people interested in writing contests can connect with each other, these additions seemed useful.

We have identified 5 different contest types:

- Short term, specific topics

- High edit volume

- Content gap

- Long term, general topic or action

- Cross-wiki or regional contests

Users who are new to writing contests can now find planning templates matched to the goals they want to achieve. Also included are bots and tools to help run the contest, information on jury composition and scoring systems, and guidance on other aspects of planning and running a contest.

This toolkit complements the evaluation report by offering a practical guide focused on implementation. Both products work together to advance movement-wide understanding of writing contests, their design, and their impact. Program leaders have a key role to play in this enterprise as we work to build out the toolkit through shared experiences, successes, and challenges.

Join the conversation!

As we adapt our product designs to best serve community members’ needs, we continue to reach out to program leaders for peer review and input on usability, among other aspects. We want to thank all the Wikimedians who have already engaged, and we encourage more to do so.

There are many ways in which you can get involved. With so much data in our hands, there are many possibilities!

- Let us know what would be of interest on the report’s talk page.

- Connect with program leaders on the toolkit and help make it a real learning space: ask questions and find help from experienced Wikimedians in the Forum.

- Share your knowledge through learning patterns, or by adding guidance and helpful links directly to the toolkit.

- Finally, pass the toolkit on to other writing contest enthusiasts across the wikis.

Happy editing!

María Cruz, Learning and Evaluation Communications and Outreach Coordinator, Wikimedia Foundation

Edward Galvez, Program Evaluation Associate, Wikimedia Foundation

Kacie Harold, Learning and Evaluation Analyst, Wikimedia Foundation
