Like crystals, Wikipedia articles have many different quality classes. Drawing by Edward Dana and James Dana via the British Library, public domain/CC0.

Wikipedia articles are generally of high quality,[1] but when did they reach their current quality level? Which parts of Wikipedia are high quality, and when did they get there?

Until now, studying article quality trends in Wikipedia has been difficult because articles are re-assessed at unpredictable times. Assessment is a manual process, so an article's recorded quality class tends to lag behind its real quality. Our models suggest that English Wikipedia has about five times as many articles of the highest quality as we previously thought!

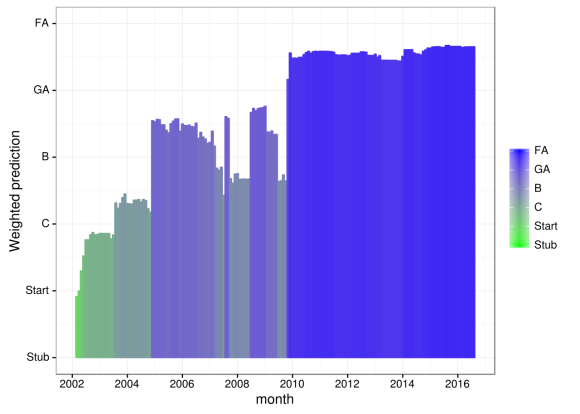

The growth of biology: article quality predictions for the English Wikipedia’s article on biology are plotted over time. Graph by Aaron Halfaker, CC BY-SA 4.0.

Today, we’re announcing the release of a dataset that captures trends in Wikipedia article quality. In order to pave the way for studies of quality dynamics in Wikipedia, we’ve generated and published a dataset containing article quality scores for all article-months since January 2001. Each row has an article quality prediction based on a content-based machine classifier, inspired by a paper in Computer Supported Cooperative Work,[2] and hosted by ORES. We’ve managed to build high quality prediction models for English-, French-, and Russian-language Wikipedias and have generated datasets for each of those wikis, totaling 670 million data points.

The data is current as of August 2016. We plan to expand to new wikis and to run updates periodically.

Here’s the citation for the data itself.

Halfaker, Aaron (2016): Monthly Wikipedia article quality predictions. figshare. https://doi.org/10.6084/m9.figshare.3859800. Retrieved: 00:56, Oct 12, 2016 (GMT).

The files are compressed tab-separated values with the following columns:

- page_id – The page identifier

- page_title – The title of the article (UTF-8 encoded)

- rev_id – The most recent revision ID at the time of assessment

- timestamp – The timestamp when the assessment was taken (YYYYMMDDHHMMSS)

- prediction – The predicted quality class (“Stub”, “Start”, “C”, “B”, “GA”, “FA”, …)

- weighted_sum – The sum of prediction weights assuming indexed class ordering (“Stub” = 0, “Start” = 1, …)
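The column layout above can be read with a few lines of Python. This is a minimal sketch, not the authors' tooling. It assumes the files are bzip2-compressed (the post only says "compressed"), that a header row may or may not be present, and that the class ordering for English Wikipedia is exactly the six classes listed above. The helper names (`parse_rows`, `read_dataset`, `weighted_sum`) and the sample rows are made up for illustration and are not taken from the dataset.

```python
import bz2
import csv
from collections import Counter
from datetime import datetime

# Column names as listed in the post.
COLUMNS = ["page_id", "page_title", "rev_id", "timestamp", "prediction", "weighted_sum"]

# Assumed class ordering ("Stub" = 0, "Start" = 1, ...); other wikis may differ.
CLASS_ORDER = ["Stub", "Start", "C", "B", "GA", "FA"]


def parse_rows(lines):
    """Yield one typed dict per tab-separated line, skipping a header if present."""
    reader = csv.reader(lines, delimiter="\t", quoting=csv.QUOTE_NONE)
    for fields in reader:
        if not fields or fields[0] == "page_id":
            continue  # blank line or header row
        row = dict(zip(COLUMNS, fields))
        yield {
            "page_id": int(row["page_id"]),
            "page_title": row["page_title"],
            "rev_id": int(row["rev_id"]),
            "timestamp": datetime.strptime(row["timestamp"], "%Y%m%d%H%M%S"),
            "prediction": row["prediction"],
            "weighted_sum": float(row["weighted_sum"]),
        }


def read_dataset(path):
    """Stream rows from a compressed file (bzip2 is an assumption)."""
    with bz2.open(path, "rt", encoding="utf-8", newline="") as f:
        yield from parse_rows(f)


def weighted_sum(probabilities):
    """Sum of class probabilities weighted by each class's index in CLASS_ORDER."""
    return sum(CLASS_ORDER.index(label) * p for label, p in probabilities.items())


# Made-up sample rows, for illustration only.
SAMPLE = [
    "page_id\tpage_title\trev_id\ttimestamp\tprediction\tweighted_sum",
    "1\tExample article\t100\t20150101000000\tStart\t1.2",
    "1\tExample article\t250\t20160101000000\tB\t3.1",
    "2\tAnother example\t300\t20160101000000\tStub\t0.3",
]

rows = list(parse_rows(SAMPLE))

# Count predicted classes among assessments taken in 2016.
by_class = Counter(r["prediction"] for r in rows if r["timestamp"].year == 2016)
print(by_class)
```

Streaming rows with a generator keeps memory use flat, which matters for a dataset of this size. The `weighted_sum` helper shows one reading of the column's definition: a continuous score between 0 and the index of the highest class, useful for plotting gradual change between class boundaries.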

ORES (the Objective Revision Evaluation System) is a web service that provides machine-generated scores of article and edit quality. Tool developers and researchers use ORES to visualize and analyze Wikipedia content. For example, the Wiki Education Foundation uses ORES scores to visualize the impact of student contributions to Wikipedia articles. See ORES’ API documentation for more details.

Footnotes

[1] Mesgari, M., Okoli, C., Mehdi, M., Nielsen, F. Å., & Lanamäki, A. (2015). “The sum of all human knowledge”: A systematic review of scholarly research on the content of Wikipedia. Journal of the Association for Information Science and Technology, 66(2), 219-245. https://doi.org/10.1002/asi.23172.

[2] Warncke-Wang, M., Ayukaev, V. R., Hecht, B., & Terveen, L. (2015). The success and failure of quality improvement projects in peer production communities. CSCW ’15. https://doi.org/10.1145/2675133.2675241.

Aaron Halfaker, Principal Research Scientist, Wikimedia Foundation

Amir Sarabadani, Wikimedia Germany (Deutschland)

Dario Taraborelli, Director, Head of Research, Wikimedia Foundation
