Few people have faced the dangerous consequences of unresolved conflict as personally as Ingrid Betancourt. In 2002, the then-presidential candidate was kidnapped by the Revolutionary Armed Forces of Colombia (FARC) and held for six years. Now an advocate for peace, she encourages others to protect free knowledge and credible sources.

Betancourt recently told the Wikimedia Foundation that she believes “values are important in the spreading of free knowledge. … Fake news is dangerous. Spinning the news is very dangerous. You can distort information to obtain a result.” One of the biggest threats to trustworthy information starts with how people evaluate sources (read how Wikipedians do it). Some focus on content created with a profit motive, others point to government-controlled propaganda, and still others say the problem starts with fake news (the subject of a recent discussion at Yale University attended by members of the Wikimedia legal team).

With so many different aspects to focus on (or neglect), misinformation threatens to delay all kinds of efforts to strengthen trustworthy knowledge across political and social divides.

Thinking about misinformation in two ways: content and access

As part of the Wikimedia 2030 strategy process, researchers at Lutman and Associates and Dot Connector Studio assessed over one hundred reports, articles, and studies to review how misinformation threatens the future of free knowledge. The assessments include dozens of powerful examples of how misinformation can have far-reaching and devastating consequences.

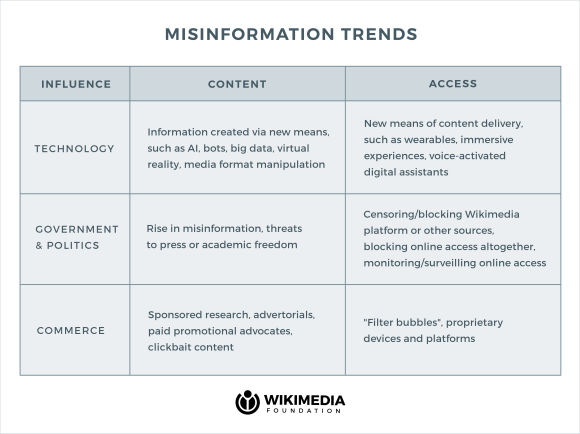

The researchers had a big task ahead of them, so they divided and conquered the broad scope of misinformation trends by splitting them into two categories: first, a “content” category, which covers trends that affect the sources Wikimedians use to develop reliable information; and second, an “access” category, which refers to “how and whether Wikipedia users are able to use the platform.” Their framework makes it possible to compare the different drivers (technology, government/politics, and commerce) that disrupt the use of verifiable sources and access to trustworthy information.

Consider, for example, how the sources of reliable information (in this framework, the content category) are influenced by misinformation that is created and/or shared by governments and political groups:

> This spread of misinformation online is occurring despite recent growth in the number of organizations dedicated to fact-checking: world-wide, at least 114 “dedicated fact-checking teams” are working in 47 countries. Looking into the future, what’s safe to expect? First, global freedom of expression will wax and wane depending on national and international political developments. Less clear is whether global trends toward autocracy will continue—or whether free societies will have a resurgence, grappling successfully with pressures on the press and academy, and the politicization of facts as merely individual biased perspectives. Second, we can expect that politically motivated disinformation and misinformation campaigns will always be with us. Indeed, the phenomenon of “fake news,” misinformation, or “alternative facts” can be traced to some of the earliest recorded history, with examples dating back to ancient times. The Wikimedia movement will need to remain nimble and editors become well-versed in the always-morphing means by which information can be misleading or falsified. It will be helpful to keep abreast of techniques developed and used by journalists and researchers when verifying information, such as those described in the Verification Handbook, available in several languages.

Although the researchers were tasked with informing the Wikimedia community about the prospects for trustworthy knowledge in the future, their findings may also inform discussions among other professionals grappling with misinformation and falsified materials, including researchers and academics, journalists, policy-makers, and thinkers.

For Betancourt, ensuring “equality among human beings” requires us to talk to people we disagree with. While it may seem counterintuitive, finding common ground is an essential part of establishing guidelines for trustworthy knowledge-sharing in politically toxic environments. In her experience as a hostage, “not talking or refusing to communicate… was a worse attitude than communicating.”

How can the Wikipedia community combat misinformation and censorship in the decades to come? The researchers offered their own suggestions, and we invite you to join us in discussing the challenges posed by this and other research.

Margarita Noriega, Strategy Consultant, Communications

Wikimedia Foundation

Chart by Blanca Flores/Wikimedia Foundation, CC BY-SA 4.0.
