Imagine a survey designed to hear from Wikimedia contributors across every project and affiliate, and used to shape the Wikimedia Foundation’s program strategies.

Welcome to Community Engagement Insights.

In 2016, the Wikimedia Foundation initiated Community Engagement Insights, under which we designed the Wikimedia Contributors and Communities Survey to cultivate learning, dialogue, and improved decision making between the Foundation and multiple community audiences. Foundation staff designed hundreds of questions and organized them into a comprehensive online survey. We then sent it to many different Wikimedians, including editors, affiliates, program leaders, and technical contributors. Having completed our basic analysis, we now want to share what we learned and what we are going to do with it.

On Tuesday, October 10, at 10 am PDT/5 pm UTC, we will hold a public meeting to present some of the data we found, explain how different teams will use it, offer guidance on how to navigate the report, and open the space for questions. You can join the conversation on a YouTube livestream and ask questions via IRC on #wikimedia-office.

How are these surveys different from others?

Historically, the Foundation did not have a systematic approach to hearing input and feedback from communities about its programs and support. For example, between 2011 and 2015, there were only four comprehensive contributor surveys:[1] three Wikipedia Editor surveys and one Global South User Survey. Between 2015 and 2016, however, we witnessed a growing demand for community survey data. In that year alone, ten different teams requested ten different surveys. While the first four surveys were exploratory, meant to learn from users about broad themes, the newer surveys sought more specific feedback on projects and initiatives. However, individual teams didn’t have a structure for approaching this type of inquiry with international audiences, nor was there a system in place for the Foundation to hear regularly from the communities it serves.

This is when we started to think about a collaborative approach to surveying communities. At the beginning of the Community Engagement Insights project, we interviewed teams to learn about the specific audience groups they worked with, and what information they wanted to learn from them. Understanding that the need for community survey data would continue to grow, we started thinking of a systematic and shared solution that could support this emerging demand. The Wikimedia Contributors and Communities Survey was the answer. It has three key characteristics:

- It is annual: The Wikimedia Contributors and Communities Survey is held every year to observe change over time.

- There is a submission process: Foundation teams can participate by submitting survey questions they would like answered.

- It is a collaborative effort: Survey expertise is spread across the organization, so people from different teams, especially those who have submitted questions, collaborate not only on survey design, sampling, and analysis, but also on outreach, messaging, translation, and communication.

By the end of the survey design process, 13 teams had submitted 260 questions, aimed at 4 audience groups: editors or contributors, affiliates, program leaders, and developers (also known as technical contributors).

What did we learn about Wikimedia communities in 2016, and about how we serve them?

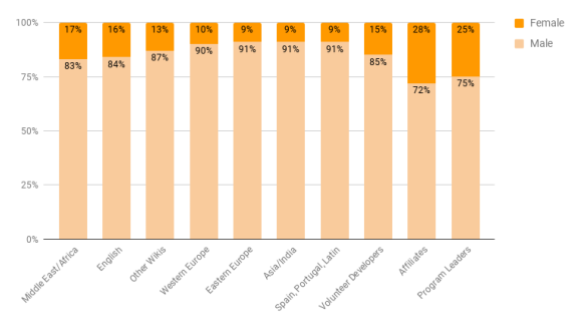

Male to female ratios.

Graphic from CE Insights 2016-17 report.

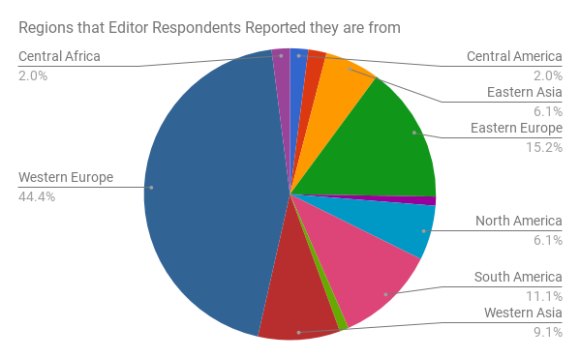

Regions editor respondents reported they come from.

Graphic from CE Insights 2016-17 report.

In terms of response rates, we had a 26% response rate from editors (4,100 responses), a 53% response rate from affiliates (127 responses), and a 46% response rate from program leaders (241 responses).[2] Volunteer developers were not sampled, and we received 129 responses from that audience group.

For Wikimedia Foundation programs that are community-facing, we collected data on three different areas. The first, personal information, allowed us to understand the characteristics of the communities we serve, such as gender and other demographics. Here, we found that while the percentage of women contributors is still below 15% across all regions, the number is higher when it comes to leadership roles: 25% of program leaders are women, as are 28% of affiliate representatives. The majority of editor respondents across all projects come from Western Europe (44%), followed by Eastern Europe (15%), South America (11%), and Western Asia (9%).[3]

The Wikimedia Contributors and Communities Survey also had questions about Wikimedia environments—the spaces that we are trying to make an impact on as a movement, such as the Wikimedia projects, software, or affiliates. Looking at the projects environment, we learned that 31% of all survey participants have felt uncomfortable or unsafe in Wikimedia spaces online or offline. Also, 39% agree or strongly agree that people have a difficult time understanding and empathizing with others. When rating issues on Wikipedia, the top three were vandalism, the difficulty in gaining consensus on changes, and the amount of work that goes undone. While 72% of the survey participants claim to be satisfied or very satisfied with the software they use to contribute, 20% reported being neither satisfied nor dissatisfied.

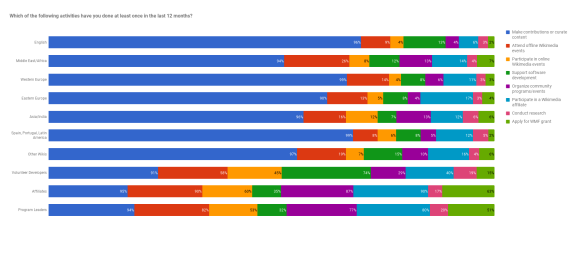

Activities in the last 12 months.

Graphic drawn from CE Insights 2016-17 report.

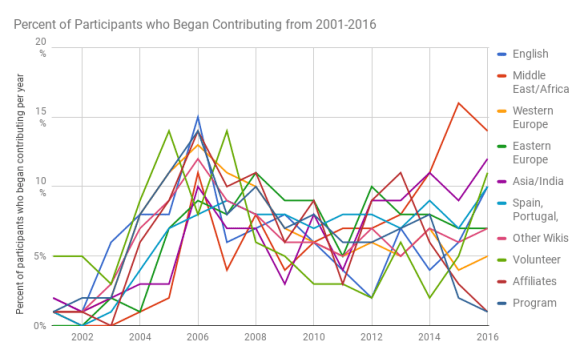

Percent of participants who began contributing from 2001–2016.

Graphic drawn from CE Insights 2016-17 report.

Wikimedia Foundation programs are the third area we explored. Programs include Annual Plan programs that aim to achieve a certain goal, like New Readers or Community Capacity Development, as well as other regular workflows, such as improving collaboration and communication. As part of that ongoing work, the Support and Safety team at the Foundation offers services to support Wikimedians. They learned that an average of 22% of editors across regions had engaged with Foundation staff or board members. Regionally, the Middle East and Africa had the highest rate of engagement, at 37%.

These are only a few highlights. The full report has hundreds more data points in all three areas, covering community health, software development, fundraising, capacity development, brand awareness, and collaboration, among other topics and programs.

Get involved!

Since each Foundation team had specific questions tailored to particular initiatives and projects, each group is now in the process of analyzing the data they received. The end goal is to use this information for data-driven direction and annual planning. Some teams will share their takeaways at the Tuesday, October 10 meeting.

We will continue to work with community organizers who can help spread the word about the survey and engage more community members in taking it next year. If you are interested in joining this project, please email eval[at]wikimedia[dot]org.

María Cruz, Communications and Outreach manager, Community Engagement

Edward Galvez, Survey specialist, Community Engagement

Wikimedia Foundation

Footnotes

1. “Community Surveys at the Foundation”, Community Engagement Insights, presentation at Wikimania 2017.

2. See more about our sampling strategy on Commons.

3. These numbers are heavily influenced by the sampling strategy and do not necessarily represent the population of editors.
