Wikipedia articles are missing images, and Wikipedia images are missing captions. A scientific competition organized by the Research team at the Wikimedia Foundation could help bridge this gap. The WMF is also releasing a large image dataset to help researchers and practitioners build systems for automatic image-text retrieval in the context of Wikipedia.

It’s often said that an image is worth a thousand words, but for the millions of images and billions of words on Wikipedia, this idiom doesn’t always apply. Images are essential for knowledge diffusion and communication, but less than 50% of Wikipedia articles are illustrated at all! Moreover, images on articles are not stand-alone pieces of knowledge: they often require large captions to be properly contextualized and to support meaning construction.

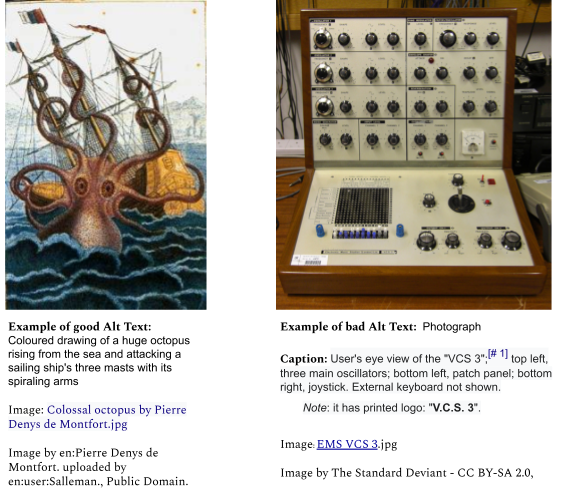

More than 300M people in the world have visual impairments, and billions of people in the Global South with limited internet access would benefit from text-only documents. These groups rely entirely on the descriptive text to help contextualize images in Wikipedia articles. But only 46% of images in English Wikipedia come with a caption text, and only 10% have some form of alt-text, with 3% having an alt-caption that is appropriate for accessibility purposes. This lack of contextual information not only limits the accessibility of visual and textual content on Wikipedia, but it also affects the way in which images can be retrieved and reused across the web.

Several Wikimedia teams and volunteers have successfully deployed algorithms and tools to help editors fix the problem of lack of visual content on Wikipedia articles. While very useful, these methods have limited coverage. An average of only 15% of articles find good candidate image matches.

Existing automated solutions for image captioning are also difficult to incorporate in editors’ workflows: the most advanced computer vision-based image to text generation methods aren’t suitable for the complex, granular semantics of Wikipedia images and are not generally available for languages other than English.

The Wikipedia Image/Caption Matching Competition on Kaggle

As part of our initiatives to address Wikipedia’s knowledge gaps, we are organizing the “Wikipedia Image/Caption Matching Competition.” We are inviting communities of volunteers, developers, data scientists, and machine learning enthusiasts to help us solve the hardest problems in the image space.

The “Wikipedia Image/Caption Matching Competition” is designed to foster the development of systems that can automatically associate images with their corresponding image captions and article titles. The Research Team at the Wikimedia Foundation will be hosting the competition through Kaggle starting September 9th, 2021. This competition was made possible thanks to collaborations with Google Research, EPFL, Naver Labs Europe, and Hugging Face, who massively helped with the data preparation and the competition design. Given the highly novel, open, and exploratory nature of the challenge proposed, the first edition of the competition comes in a “playground” format.

Participation is completely online and open to anyone with access to the internet. In this competition, participants will be provided with content from Wikipedia articles in 100+ language editions. They will be asked to build systems that automatically retrieve the text (an image caption, or an article title) closest to a query image. The best models will account for the semantic granularity of Wikipedia images and operate across multiple languages.

The collaborative nature of the platform helps lower barriers to entry and encourages broad participation. Kaggle is hosting all data needed to get started with the task, example notebooks, a forum for participants to share and collaborate, and submitted models in open-sourced formats. With this competition, we hope to provide a fun and exciting opportunity for people around the world to grow their technical skills while contributing to one of the largest online collaborative communities and the most widely used free online encyclopedia.

A large dataset of Wikipedia image files and features

Participants will work with one of the largest multimodal datasets ever released for public usage. The core training data is taken from the Wikipedia Image-Text (WIT) Dataset, a large curated set of more than 37 million image-text associations extracted from Wikipedia articles in 108 languages that was recently released by Google Research.

The WIT dataset offers extremely valuable data about the pieces of text associated with Wikipedia images. However, due to licensing and data volume issues, the Google dataset only provides the image name and corresponding URL for download and not the raw image files.

Getting easy access to the image files is crucial for participants to successfully develop competitive models. Therefore, today, the Wikimedia Research team is releasing its first large image dataset. It contains more than six million image files from Wikipedia articles in 100+ languages, which correspond to almost1 all captioned images in the WIT dataset. Image files are provided at a 300-px resolution, a size that is suitable for most of the learning frameworks used to classify and analyze images. The total size of the dataset released stands around 200GB, partitioned into 200 files of around 1GB.

With this large release of visual data, we aim to help the competition participants—as well as researchers and practitioners who are interested in working with Wikipedia images—find and download the large number of image files associated with the challenge, in a compact form.

While making the image files publicly available is a first step towards making Wikipedia images accessible to larger audiences for research purposes, the sheer size of the raw pixels makes the dataset less usable in lower-resource settings. To improve the usability of our image data, we are releasing an additional dataset, containing an even more compact version of the six million images associated with the competition. We compute and make publicly available the images’ ResNet-50 embeddings. We describe each image with a 2048-dimensional signature extracted from the second-to-last layer of a ResNet-50 neural network trained with Imagenet data. These embeddings contain rich information about the image content and layout, in a compact form. Images and their embeddings are stored on Kaggle, and on our Wikimedia servers.

Here is some sample PySpark code to read image files and embeddings:

# File Format:

## Pixels columns: image_url, b64_bytes, metadata_url

### b64_bytes are the image bytes as a base64 encoded string

## Embedding columns: image_url, embedding

### Embedding: a comma separated list of 2048 float values

# embeddings

@F.udf(returnType='array<float>')

def parse_embedding(emb_str):

return [float(e) for e in emb_str.split(',')]

# parse embedding array

first_emb = (spark.read

.csv(path=resnet_embeddings_training+'*.csv.gz',sep="t")

.select(F.col('_c0').alias('image_url'), parse_embedding('_c1').alias('embedding'))

.take(1)[0]

)

print(len(first_emb.embedding))

# 2048

# pixels

first_image = (spark

.read.csv(path=image_pixels_training+'*.csv.gz',sep="t")

.select(F.col('_c0').alias('image_url'), F.col('_c1').alias('b64_bytes'),F.col('_c2').alias('metadata_url'))

.take(1)[0]

)

# parse image bytes

import base64

from io import BytesIO

from PIL import Image

pil_image = Image.open(BytesIO(base64.b64decode(first_image.b64_bytes)))

print(pil_image.size)

# (300, 159)This is an initial step towards making most of the image files publicly available and usable on Commons in a compact form. We are looking forward to releasing an even larger image dataset for research purposes in the near future!

We encourage everyone to download our data and participate in the competition. This is a novel, exciting, and complex scientific challenge. With your contribution, you will be advancing the scientific knowledge on multimodal and multilingual machine learning. At the same time, you will be providing open, reusable systems that could help thousands of editors improve the visual content of the largest online encyclopedia.

Acknowledgements

We would like to thank everyone who contributed to this amazing project, starting with our WMF colleagues: Leila Zia, head of Research, for believing in this project and for overseeing every stage of the process, Stephen La Porte and Samuel Guebo who supported the legal and security aspects of the data release, Ai-Jou (Aiko) Chou for the amazing data engineering work, Fiona Romeo for the data about alt text quality, and Emily Lescak and Sarah R. Rodlund for helping with the release of this post.

Huge thanks to the Google WIT authors (Krishna Srinivasan, Karthik Raman, Jiecao Chen, Michael Bendersky, and Marc Najork) for creating and sharing the database, and for collaborating closely with us on this competition, and to the Kaggle team (Addison Howard, Walter Reade, Sohier Dane) who worked tirelessly for making the competition happen.

All this would not have been possible without the valuable suggestions and brainstorming sessions with an amazing team of researchers from different institutions: thank you Yannis Kalantidis, Diane Larlus, and Stephane Clinchant from Naver Labs Europe; Yacine Jernite from Hugging Face, and Lucie Kaffee from the University of Copenhagen, for your excitement and dedication to this project!

Footnotes

- We are publishing all images having a non-null “reference description” in the WIT dataset. For privacy reasons, we are not publishing images where a person is the primary subject, i.e., where a person’s face covers more than 10% of the image surface. To identify faces and their bounding boxes, we use the RetinaFacedetector. In addition, to avoid the inclusion of inappropriate images or images that violate copyright constraints, we have removed all images that are candidate for deletion on Commons from the dataset.

Originally published by Miriam Redi, Fabian Kaelin and Tiziano Piccardi on the Wikimedia Techblog on 9 September 2021.

Can you help us translate this article?

In order for this article to reach as many people as possible we would like your help. Can you translate this article to get the message out?

Start translation