Our vision is a world in which every single human being can freely share in the sum of all knowledge. Machine translation has the potential to help us achieve that vision by enabling more people to contribute content to Wikipedia in their native or preferred languages.

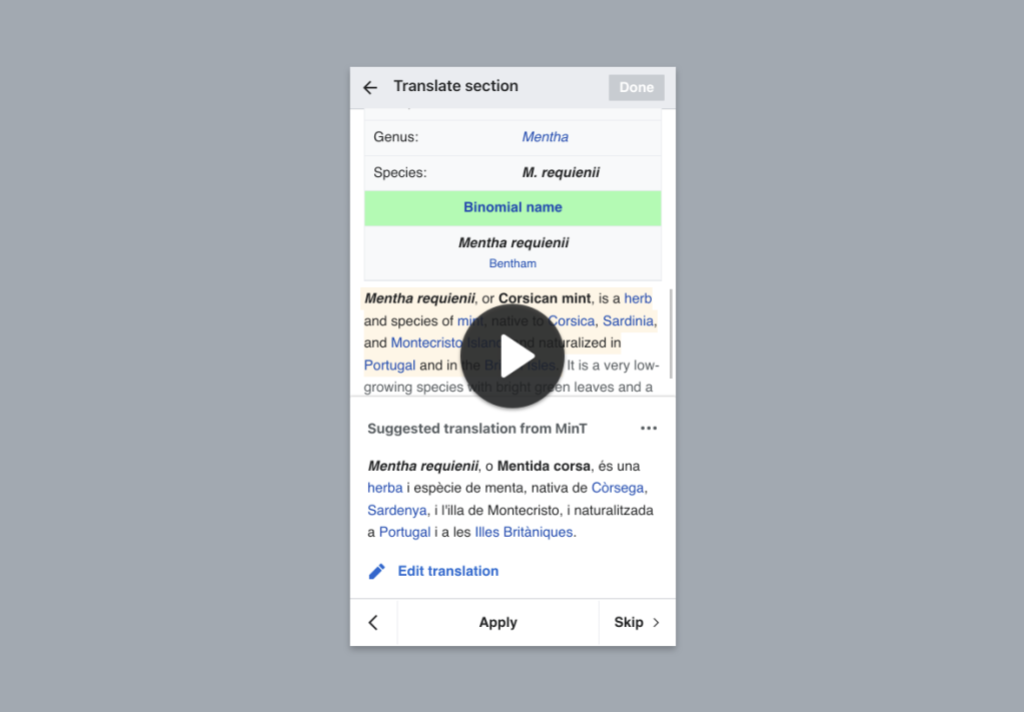

Content Translation, the tool used by Wikipedia editors to translate over one and a half million articles, uses machine translation as a starting point when it is available, making sure to keep humans in the loop by encouraging them to improve the initial translation and controlling how much it is edited. In this case, providing automation while keeping the humans in control helps Wikipedia editors to become more productive while producing quality content. However, not all languages have good quality machine translation available for editors to benefit from.

We are launching MinT in order to expand the current machine translation support. MinT (“Machine in Translation”) is a new translation service by the Wikimedia Foundation Language Team that is based on open source neural machine translation models and supports over 200 languages. The service is hosted in Wikimedia Foundation infrastructure, and it runs translation models that have been released by other organizations with an open source license.

[Source of the video]

MinT is designed to provide translations from multiple machine translation models. Initially it uses the following models:

- NLLB-200. The latest model from the No Language Left Behind project by a research team at Meta. This model supports translation across 200 languages, including many that are not supported by other vendors. An initial pilot was conducted in collaboration with the research team at Meta to support a small set of languages, and we obtained very positive feedback about the translation quality provided by this model. Gradually, we have been enabling the NLLB-200 model for the translation of Wikipedia articles in more languages.

- OPUS. The OPUS (Open Parallel Corpus) project from the University of Helsinki compiles multilingual content with a free license to train a translation model. The availability of open source cross-language resources may be limited for many languages, but the integration with Wikimedia tools enables a fully open cycle of improvement: translated Wikipedia articles are incorporated into the OPUS repository as new resources to improve the translation quality for the next version of the model.

- IndicTrans2. The IndicTrans2 project provides translation models to support over 20 Indic languages. These models were developed by AI4Bharat@IIT Madras, a research group at the Indian Institute of Technology Madras. The models were created with the support of the Digital India Bhashini Mission, an initiative from the Ministry of Electronics and Information Technology (MeitY) of India to advance the state of Indic languages using the latest technologies; and Nilekani Philanthropies.

- Softcatalà. Softcatalà is a non-profit organization with the goal to improve the use of Catalan in digital products. As part of the Softcatalà Translation project, translation models used in their translator service to translate 10 languages to and from Catalan have been released.

The translation models used by MinT support over 200 languages, including many underserved languages that are getting machine translation for the first time. For example, the recent integration of NLLB-200 model into the Content Translation tool supported machine translation for Fula, spoken by 25+ million people, for the first time.

Translation quality for MinT depends on the data available for the supported languages. Thus, it may be initially low for languages with low resources. However, the integration with our tools enables a fully open cycle of improvement: translated Wikipedia articles are incorporated into the Opus repository as new resources to improve the translation quality for the next version of the model. That is, editors can help the machine translation to become better by translating Wikipedia articles and fixing the mistakes it makes. There are also other ways in which language communities can contribute to the Opus project or other open communities such as Tatoeba whose contents are also incorporated into the translation models.

MinT is available in Content Translation for 155 languages (44 of them with MinT as the only machine translation option available), and we plan to keep expanding the language support. You can try MinT when translating a Wikipedia article in those languages, on desktop or mobile. You can read more about MinT and share your feedback in the project summary page. If you are interested in setting-up your own translation system, the code for MinT is also available.

Why another translation service?

Content Translation already integrates several translation services. In 2014 with the launch of the Content Translation tool, the tool focused on translations from Spanish to Catalan both due to the dedication of these editor communities and because of an integration with the open-source machine translation system Apertium. Apertium supports 43 languages and works very well for closely-related languages. However, contributing to the project in order to expand the language support requires advanced linguistic expertise to encode the specific rules of a language.

Since then, we have worked with Wikimedia communities and external partners to expand the translating experience for Wikipedia editors and incorporate a number of machine translation services into the Content Translation tool. The Content Translation tool makes this space possible by integrating open source systems like an Apertium instance, alongside open API clients from otherwise-closed platforms such as Google Translate. Each of these models adds unique value to the Content Translation tool – additional languages, more translation options for existing languages, or often both.

In this context, with MinT we can provide, for the first time, a translation service that is open, is based on the latest machine-learning techniques, and covers a broad range of languages with a quality level that is comparable to the proprietary services. For example, the NLLB-200 model in particular, can support over 200 languages, including Wikipedias in 44 languages for which machine translation was not available. Furthermore NLLB-200 also supports 23 additional languages for which there is no Wikipedia yet. This represents a total of 67 languages without machine translation support that can experience automatic translations with MinT for the first time.

In addition, MinT represents an improvement in translation quality for several other languages that are already covered by commercial services. For example, feedback from Icelandic and Igbo speakers indicated their preference for NLLB-200 (known as Flores at the time) as a higher quality option available for their languages. An Icelandic Wikipedian wrote: “Flores is usually better than Google Translate for Icelandic”; Igbo Wikipedians wrote: “Flores is better and should be set as default” and “Flores is more easy and best to use in translations”.

After an initial pilot, we expanded the support for an additional set of languages, giving priority to underserved languages without other options to translate. Input from Wikimedia communities has been extremely useful. Wikipedia editors from different Wikipedia communities such as Kashmiri, Santali, or Tumbuka have expressed their interest in the new machine translation capabilities. At the same time, Cantonese Wikipedia editors preferred not to have access to translations using the NLLB-200 model since the dataset used to train the model was based on a variant of the language that was not useful for the Cantonese Wikipedia.

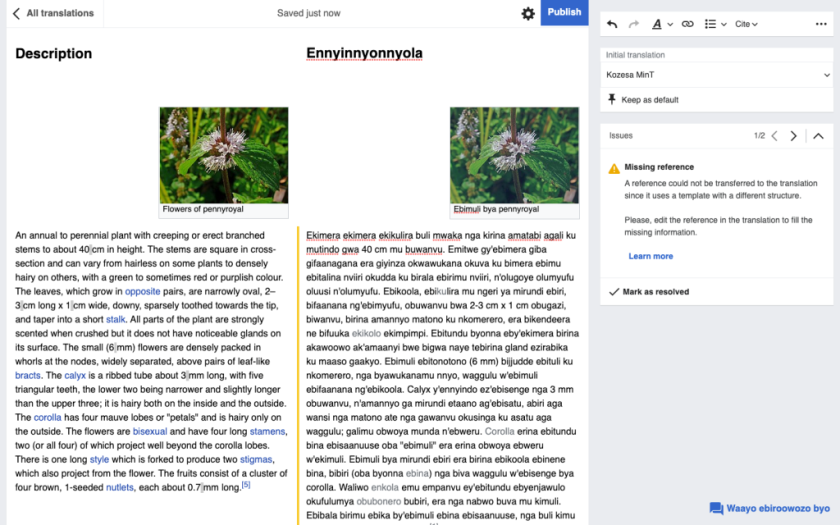

[Source of the image]

A long journey

Creating MinT has been a long journey expanding for about four years. This process started with the initial explorations of OPUs models in 2019 and completes the next chapter in 2023 with the release of MinT.

In January 2019 we connected with the OpusMT project for integration with Content Translation. The Language team worked with the project and contributed to their codebase to help deployment automation and API endpoints. Based on this collaboration, the Language team co-authored a paper with the Opus-MT team.

A test instance was enabled and integrated in Content Translation with support for Assamese, Central Bikol and Tsonga. However, the full potential of the project could not be achieved since performance quickly became a blocker.

Neural Machine Translation models, based on machine learning approaches, have high performance requirements. Running them with minimally acceptable performance requires hardware acceleration using GPUs (graphics processing units). GPUs, while originally used for accelerating 3D graphic computation, became very useful for other data-intensive fields such as machine learning.

The need for GPUs became a problem when running models such as NLLB-200 and OPUS because the particular GPU architecture required for those models relied on proprietary drivers, and this was not acceptable for the maintainers of the Wikimedia infrastructure.

In 2021, before the No Language Left Behind (NLLB) project and their translation model NLLB-200 were publicly announced in 2022, the research team working in the project connected with the Wikimedia Foundation Language team.

The focus of their project to provide an open source model to support underserved languages was very relevant for the Wikimedia Foundation Language team. However, based on the previous unsuccessful attempt to integrate OPUS models, we were upfront about the limitations we had to run these types of models in the Wikimedia Foundation infrastructure.

The research team at Meta agreed to run the service to facilitate the integration in Content Translation as an external service for a period of a year. In this way we could evaluate how the model worked for a set of pilot wikis. Initially for 6 languages (Igbo, Icelandic, Luganda, Occitan, Chinese, and Zulu).

After the initial integration in Content Translation, 17 additional languages were supported in Content Translation using NLLB-200, reaching a total of 23 languages. Usage data of the different translation services was analyzed for the initial period and after the addition of new languages. The reports were showing very promising data. NLLB-200 was the translation service which required less amount of edits by translators and resulted in less articles being deleted:

“Overall across all languages, NLLB-200 currently has the lowest percent of articles created with content translation that are deleted (0.13%) compared to all other MT services available, while it has the highest percent of translations modified under 10%, indicating that the modifications rates for this machine translations service are a signal of good machine translation quality.”

The recent developments of OpenNMT Ctranslate2 library resolved this blocker. Ctranslate2 is a library to optimize machine learning models for better performance. These performance improvements make it possible for models to run in servers without GPU acceleration, providing an acceptable performance. This process requires a one-time conversion of the models to obtain an optimized version. MinT is running the optimized version of NLLB-200, OPUS, IndicTrans2, and Softcatalà translation models using Ctranslate2 to avoid the need for GPU acceleration. We achieved translation performance similar to GPU by using the optimized models with just CPUs.

Next steps

The initial focus has been to enable MinT in Content translation for languages which had no machine translation support before. In this way, Wikipedia editors in those communities can benefit from machine translation for the first time.

MinT can be helpful in many contexts, and we plan to keep expanding machine translation support in different ways:

- More languages. Enabling more of the languages supported by the current models. Even those languages covered by other translation services can benefit from a service such as MinT. Community feedback will help us to determine which options to make available by default.

- More projects. Having an open machine translation service such as MinT enables the integration and use of machine translation in a diversity of projects beyond Content Translation. Machine translation can be a useful service for many other projects by the Wikimedia communities and for the broader internet.

- More models. MinT was designed to support multiple translation models, and we think it will be an interesting platform for organizations working in the Machine Learning space to apply their open models to help language communities in a real context.

The Content Translation tool exemplifies how an open model can empower individuals and organizations to collaborate across languages and technologies to build something that benefits everyone while remaining aligned with Wikimedia’s standards in technology and design.

We continue to grow and strengthen these tools and are excited to keep doing so in collaboration with the global community of Wikipedia editors and partner organizations.

Can you help us translate this article?

In order for this article to reach as many people as possible we would like your help. Can you translate this article to get the message out?

Start translation