This post is part of a series of Safety and Advocacy posts by the Community Resilience and Sustainability team. This post was authored by staff from the Trust and Safety Disinformation team, whose training module on disinformation you can find on learn.wiki.

Introduction and Definition

Building the largest online encyclopaedia and filling it with notable information from the world’s knowledge is a massive undertaking. For over two decades, contributors have curated content, created and shaped policies, and crafted the world’s knowledge in an accessible format for readers.

More recently, disinformation has become a growing problem around the world, and Wikimedia projects are not immune to disinformation campaigns. So what is disinformation? Why is disinformation different on Wikimedia projects? And what can Wikipedia editors do to tackle this problem?

First things first, let’s define our terms. As with many buzzwords, "disinformation" can be incredibly difficult to define clearly. Although many different definitions exist, the most common ones contain three key elements:

- Disinformation contains false or misleading information.

- Disinformation is shared with the intent to deceive.

- Disinformation has the potential to harm readers.

If any one of these three key elements is missing, the information shared cannot be defined as disinformation, but it may still be a form of information disorder, such as misinformation or malinformation. Misinformation is false or misleading information that is shared without the intent to deceive. Malinformation is information that may itself be correct but is shared out of context. Doxxing is a form of malinformation (you can read more about it, along with suggestions for how to protect yourself, on Diff).

Disinformation is different here

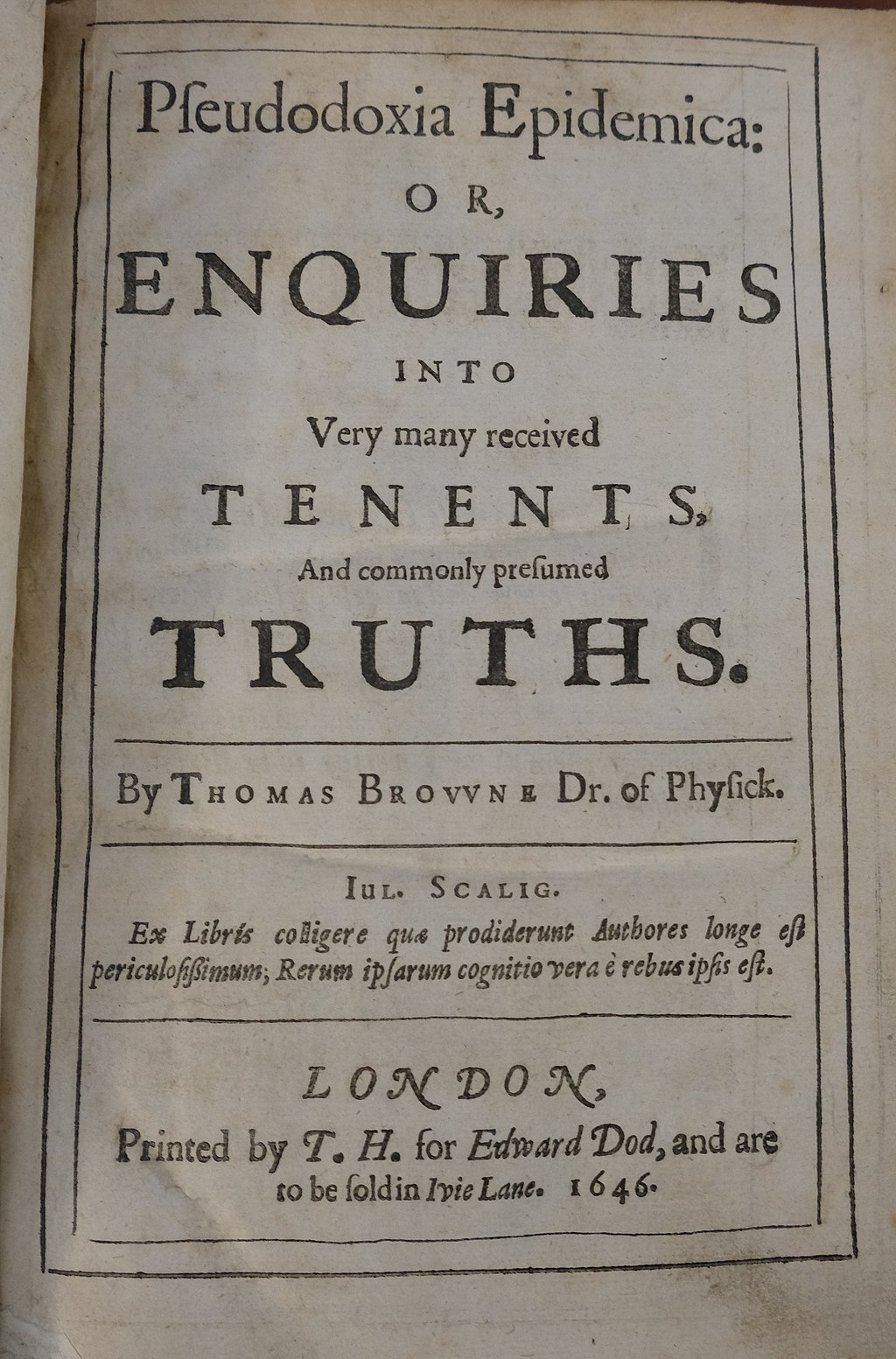

The study of disinformation emerged as an academic discipline in the immediate aftermath of the 2016 US Presidential Election. However, it is important to note that disinformation itself is not a new phenomenon. Misleading information campaigns have accompanied pandemics, wars, and elections for centuries. And where disinformation reigns, anti-disinformation work begins. This work, too, has taken place for many a year. Take, for example, the case of Thomas Browne, author of the 1646 treatise Pseudodoxia Epidemica, or Enquiries into very many received tenents and commonly presumed truths. Browne sought to refute some of the false and misleading claims which had made their way into everyday life.

But this does not mean that all anti-disinformation work looks alike. The Wikimedia ecosystem has a number of features which make anti-disinformation work here unique. These include:

Community – the Wikimedia movement has a vested interest in stopping disinformation from taking hold on our projects.

Platform – rather than thousands of opinions on a single topic, Wikipedia aims to achieve community consensus based on the best verifiable sources on one page per topic.

Methods – rather than relying on technology such as bot accounts, disinformation spreaders are forced to engage with wiki bureaucracy in order to maintain their misleading content.

Guidelines – content is expected to meet the standards of verifiability, neutral point of view (NPOV), and no original research.

In short, disinformation works differently in the Wikimedia ecosystem than it does elsewhere, such as on social media. As a result, our solutions to the problems that disinformation brings need to be tailored to our specific needs. So what do we do to challenge disinformation threats, and how can we do it better?

The Wikimedia Model for Challenging Disinformation

Local Administrators

The first port of call for challenging disinformation should be the administrators of your local project. They are best placed to understand the context and history of the pages under threat, and can best direct community resources to resolve content issues.

Wiki Initiatives

There is a range of initiatives across the movement which deal with disinformation in one way or another, including: WikiCred, a project aimed at training media professionals to use Wikipedia; the Knowledge Integrity Risk Observatory; and various projects led by the Foundation’s Moderator Tools team. The Foundation’s Global Advocacy team is working on a map of some of these initiatives, to which you can contribute.

Trust and Safety Disinformation Team

Should the problem involve serious behavioural issues which local administrators are unable to resolve, the Wikimedia Foundation has a Trust and Safety Disinformation team. This team is available to help with a range of issues:

- In cases which involve serious threats of harm, they can be contacted via emergency@wikimedia.org;

- In cases that cannot be adjudicated by local community mechanisms, they can be reached via ca@wikimedia.org;

- The Wikimedia Foundation’s Trust and Safety Disinformation team also runs the Disinformation Response Taskforce (DRT) around elections at high risk of disinformation attacks. In cases where the community is concerned about disinformation efforts connected to prominent elections or geopolitical events, the taskforce can be contacted via drt@wikimedia.org.

Ahead of a bumper year of elections in 2024, the Trust and Safety Disinformation team has begun preparing for several Disinformation Response Taskforces (DRTs), designed to support Wikimedia communities in maintaining knowledge integrity during high-risk events.

The Trust and Safety Disinformation team has also recently published a training module about anti-disinformation work, which you can find on learn.wiki. If you would like to establish your own personal toolkit for challenging disinformation in your wiki community, please check it out!

Members of the Disinformation team will be available during the next Community Resilience and Sustainability Conversation Hour in November. Join, ask your questions, and learn more.
