A study published in February in PLOS ONE by researchers at the Oxford Internet Institute examined the way bots behave on Wikipedia. The researchers concluded that “bots on Wikipedia behave and interact […] unpredictably and […] inefficiently” and “disagreements likely arise from the bottom-up organization […] without a formal mechanism for coordination with other bot owners”.

The authors assume that a “bot fight” takes place whenever a bot reverts an action by another bot, and the media soon picked up on this trope, as some of the headlines show:

- Study reveals bot-on-bot editing wars raging on Wikipedia’s pages (The Guardian)

- Automated Wikipedia Edit-Bots Have Been Fighting Each Other For A Decade (Huffington Post)

- Investigation Reveals That Wikipedia’s Bots Are in a Silent, Never-Ending War With Each Other (Science Alert)

- Battle of the Bots: ‘Main Reason for Conflicts is Lack of Central Supervision’ (Sputnik)

- The BBC even had a special segment on Newsnight, where they claimed that “Petty disputes [between bots] escalate into all out wars that can last years.” (BBC, YouTube video)

- Internet Bots Fight Each Other Because They’re All Too Human (Wired)

These headlines assume that a bot reverting an edit made by another bot is a conflict or a “fight”: bots getting into edit wars with each other over the content of articles. This assumption sounds reasonable, but it does not hold for the overwhelming majority of bot-bot reverts.
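The sketch below illustrates the distinction in Python. It is a minimal example under stated assumptions. It shows how reverts are often detected in Wikipedia research, and how a one-way revert differs from a back-and-forth exchange. An edit counts as an “identity revert” when its text exactly matches an earlier revision, compared by SHA-1 checksum. The `Revision` class, the 15-revision `radius`, and the “mutual revert” test are illustrative assumptions. They are not the operationalization used in either paper.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Revision:
    user: str  # account that saved the revision
    text: str  # full page text after the edit


def find_identity_reverts(history, radius=15):
    """Return (reverting_index, restored_index) pairs for a page history.

    Revision i is treated as an identity revert if its text checksum
    matches an earlier revision j (other than i - 1) within `radius`
    revisions, i.e. it restored that earlier state exactly.
    """
    checksums = [hashlib.sha1(r.text.encode("utf-8")).hexdigest() for r in history]
    reverts = []
    for i in range(1, len(history)):
        if checksums[i] == checksums[i - 1]:
            continue  # a null edit restores nothing
        for j in range(i - 2, max(-1, i - radius - 1), -1):
            if checksums[j] == checksums[i]:
                reverts.append((i, j))
                break  # only the most recent matching state counts
    return reverts


def mutual_revert_pairs(history, reverts):
    """Return pairs of users who each reverted the other at least once.

    A one-way revert (e.g. removing links another bot added earlier) is not
    flagged; only back-and-forth undoing is, which is closer to an edit war.
    """
    directed = set()
    for i, j in reverts:
        reverter = history[i].user
        # Everyone whose edits between revisions j and i were undone by i.
        for k in range(j + 1, i):
            if history[k].user != reverter:
                directed.add((reverter, history[k].user))
    return {tuple(sorted(pair)) for pair in directed if pair[::-1] in directed}


one_way = [
    Revision("Human", "Robots are machines."),
    Revision("InterwikiBot", "Robots are machines.\n[[de:Roboter]]"),
    Revision("Addbot", "Robots are machines."),
]
war = [Revision("BotA", "x"), Revision("BotB", "y"), Revision("BotA", "x"), Revision("BotB", "y")]

print(mutual_revert_pairs(one_way, find_identity_reverts(one_way)))  # set()
print(mutual_revert_pairs(war, find_identity_reverts(war)))          # {('BotA', 'BotB')}
```

In this sketch, Addbot removing a link that an interwiki bot had added registers as a revert, but not as a mutual one. Only two accounts that keep undoing each other are flagged.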

In this post, I’ll explain how these bots work on Wikipedia and push back on the notion that the majority of bots that “revert other bots” are “fighting.” I’ll also detail a few examples where bot-bot fights did occur but were limited in scope because of the strong bot governance that exists on the platform. For more details about this rebuttal research study, see our paper.

How Bots Work

Bots perform a variety of automated tasks on Wikipedia to help the encyclopedia run smoothly. There are currently over 2,100 bots in use on the English Wikipedia, and they do everything from leaving messages for users to reverting vandalism when it occurs.

In many instances where bots revert the changes made by other bots, they are not in conflict with one another. Instead, they’re collaborating to maintain links between wikis or keep redirects clean, often because human Wikipedians have changed the content of an article.

Not Really a Fight

Stuart Geiger, an ethnographer and post-doctoral scholar at the UC-Berkeley Institute for Data Science, has been studying and writing about the governance of bots in Wikipedia for many years. We have been working to replicate the PLOS ONE study from many angles (see our paper), and we are looking into cases the authors identified as bot-bot conflict. When we dig into these cases, we find that only a tiny portion of bot-bot reverts are actually conflict.

“I think it is important to distinguish between two kinds of conflict around bots,” says Dr. Geiger. “The kind of conflict people imagined when the Even Good Bots Fight paper was published is when bots get into edit wars with each other over the content of articles, because they are programmed with opposing directives. This certainly happens, but it is relatively rare in Wikipedia, and it typically gets noticed and fixed pretty quickly. The Bot Approvals Groups and the bot policies generally do a good job at keeping bot developers in communication with each other and the broader Wikipedia/Wikimedia community.”

“The second kind of bot conflict, which I find far more interesting, is when human Wikipedians debate with each other about what bots ought to do,” Geiger continues. “These can get as contentious as any kind of conflict in Wikipedia, but these debates are typically just that: debates on talk pages. It is ultimately a good thing that Wikipedians actively debate what kind of automation they want in Wikipedia. Every major user-generated content platform is using automation behind the scenes, but Wikipedia is one of the only platforms where these debates and decisions are made publicly, backed by community-authored policies and guidelines. And in the rare cases when bot developers have gone beyond their scope of approval from the Bot Approval Group, the community tends to notice pretty quickly.”

The case of the Addbot-pocalypse

In March of 2013, a bot named Addbot reverted 146,614 contributions that other bots had made to English Wikipedia. The bot was designed to remove the old style of “interlanguage links” to make room for a new way of capturing the cross-language relationships between articles in Wikidata. For example, the article for Robot on English Wikipedia is linked to the article Roboter on German Wikipedia. Before the decision was made to move these links to Wikidata, dozens of other bots were used to keep this link graph up to date. But after the move to Wikidata’s central repository, that automated work and the links it had created were no longer necessary. So Addbot removed all traces of interlanguage links from all 293 Wikipedia language editions, leaving Wikidata to maintain them.
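The core of that removal step can be sketched in a few lines of Python. This is a simplified illustration, not Addbot’s actual code, which was written in PHP. It assumes each interlanguage link sits on its own line in the form `[[de:Roboter]]`. It also uses a small, hypothetical set of language codes. Wikidata’s sitelinks are passed in as a plain dictionary, and a link is dropped only when Wikidata already provides the same target. Real title matching would also need to normalize underscores and first-letter case.

```python
import re

# Hypothetical subset of language codes; a real bot would use the full list.
LANGUAGE_CODES = {"de", "es", "fr", "ja"}

# Matches a line holding exactly one link such as "[[de:Roboter]]".
INTERLANGUAGE_LINK = re.compile(r"^\[\[([a-z][a-z-]*):([^\]|]+)\]\]$")


def remove_interlanguage_links(wikitext, wikidata_sitelinks):
    """Drop local interlanguage links that Wikidata already provides.

    `wikidata_sitelinks` maps a language code to the linked title, for
    example {"de": "Roboter"}. Links Wikidata does not cover are kept, so
    no cross-language connection is lost by the removal.
    """
    kept = []
    for line in wikitext.splitlines():
        match = INTERLANGUAGE_LINK.match(line.strip())
        if match:
            lang, title = match.groups()
            if lang in LANGUAGE_CODES and wikidata_sitelinks.get(lang) == title:
                continue  # redundant: the same link now comes from Wikidata
        kept.append(line)
    return "\n".join(kept)


page = "Robots are machines.\n[[Category:Robots]]\n[[de:Roboter]]\n[[fr:Robot]]"
# Keeps the category and the [[fr:Robot]] link, drops [[de:Roboter]].
print(remove_interlanguage_links(page, {"de": "Roboter"}))
```

The conservative check against the sitelinks means each edit only removes redundancy. That is also why such an edit shows up as a “revert” of whichever bot originally added the link.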

On the surface, Addbot’s activities amount to the single greatest bot-on-bot revert event in the history of Wikipedia. Yet this was not an example of bots fighting with one another on-wiki; it was an example of bots collaborating to maintain the encyclopedia. These reverts were not an ongoing “bot war” with bots jockeying for the last edit. Addbot was simply removing links that were no longer needed.

—————

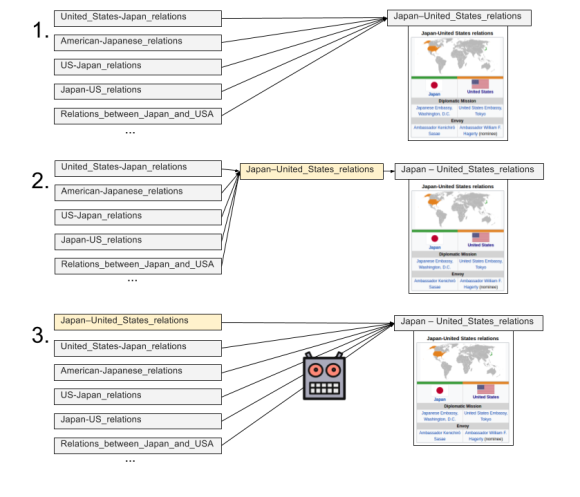

A common example of non-conflict: Double-redirect fixing bots. In June of 2008, The Transhumanist changed the “-” (a hyphen) in the title “Japan-United States relations” to a “–” (an en dash), in line with Wikipedia’s Manual of Style. This created the initial redirect, which made sure that links to the old title would still find the article. A year later, a different editor renamed the article to “Japan – United States relations” (adding spaces around the en dash and citing the relevant portion of the style guide). This created a double redirect, which broke navigation from pages across Wikipedia that linked to the old title. So Xqbot got to work and cleaned up the double redirects (as depicted in the image above). Seven months later, a third Wikipedian renamed the article again, this time removing the spaces around the en dash because the style guideline had changed. This time, DarknessBot detected the double redirect and cleaned it up by removing the spaces in the redirect links as well. From a superficial point of view, DarknessBot reverted Xqbot. But when we look at the whole story, it’s clear that this isn’t conflict, but rather an example of bots collaborating to keep the redirect graph clean.
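The logic a double-redirect bot applies can be sketched in Python as follows. This sketch assumes the redirects are already loaded into a dictionary that maps each redirect title to its target. Real bots read that information from the wiki itself. The sketch points each redirect straight at its final destination. Replaying the “Japan–United States relations” moves shows why DarknessBot’s fix looks like a revert. After the third rename, the hyphenated redirect points back at the same target it had before Xqbot’s edit.

```python
def fix_double_redirects(redirects):
    """Point every redirect directly at its final, non-redirect target.

    `redirects` maps a redirect title to the title it points at; any title
    that is not a key is treated as a real article.
    """
    fixed = {}
    for source, target in redirects.items():
        seen = {source}
        # Follow the chain of redirects until it ends at an article.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target!r}")
            seen.add(target)
            target = redirects[target]
        fixed[source] = target
    return fixed


HYPHEN = "Japan-United States relations"
EN_DASH = "Japan\u2013United States relations"
SPACED = "Japan \u2013 United States relations"

# 2009: the en-dash title now redirects to the spaced title, so the hyphen
# redirect has become a double redirect (the state Xqbot cleaned up).
after_xqbot = fix_double_redirects({HYPHEN: EN_DASH, EN_DASH: SPACED})

# Later: the article moves back to the unspaced title, the spaced title
# becomes a redirect, and the hyphen redirect is a double redirect again.
after_darknessbot = fix_double_redirects({HYPHEN: SPACED, SPACED: EN_DASH})

# The hyphen redirect ends up pointing where it did before Xqbot's edit.
print(after_darknessbot[HYPHEN] == EN_DASH)  # True
```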


—————

In fact, Addbot’s reversion of hundreds of thousands of other bots’ edits was a productive activity and the outcome of Wikipedia’s well-oiled bot governance system. Addbot is one of the best examples of a well-coordinated bot operation in an open online space:

- The bot’s source code and operational status are well-documented

- It is operated in line with the various bot policies that different language versions can set for themselves

- It is run by a known maintainer, and

- It is performing a massive task that would be a waste of human attention.

We contacted Adam Shorland, the developer of Addbot, to talk about his experiences creating a bot that collaborated with other bots on the platform.

Tell me a little bit about your experience as a bot maintainer. How many bots have you built and what do they do?

So, I have only really ever built one bot, and that is addbot. It was built in PHP and has been through several iterations.

Although I have only ever run one bot, it has had many tasks over the years, and the biggest of those was the removal of interwiki links from Wikimedia projects after the introduction of sitelinks provided by Wikidata.

The code for the bot first used a single simple class for interacting with MediaWiki, created, I believe, by ClueBot creator cobi. Since then I have been writing a still-in-progress set of libraries called addwiki, also in PHP, which I use for the newer bot tasks.

Using PHP to interact with MediaWiki makes sense, really, especially as MediaWiki is written in PHP and is slowly being split into libraries. Wikibase (which runs Wikidata) is already there, and I had to write minimal code to interact with it, even with its complex data model.

I should probably also mention I am a BAG (Bot Approvals Group) member on the English Wikipedia, however I haven’t been active in a while.

How did you end up taking on the task of migrating interlanguage links to Wikidata?

So, one thing to note here is that addbot did not move interwiki links to Wikidata, it simply removed them from other wiki projects when Wikidata was already providing them.

The removal task alone was much simpler than the move task, which allowed for extra speed and amazing accuracy in edits.

I don’t remember exactly how I started the task. I remember it not being long after I first found out about Wikidata. Someone I had worked with before, legoktm, was also partially working on the task at the beginning.

As I looked around I didn’t see anyone willing to complete the task as a whole, across all wikis, and do it quickly, so I decided to jump in.

Having one bot / one version of code to do all sites also made sense as the task, no matter the wiki project, is essentially exactly the same. Having one script allowed the code to be refined using all of the feedback from the first few projects that it ran on.

How does it feel to have the most conflicty bot — according to the definitions used in “Even Good Bots Fight”? Did you see Addbot’s actions as conflicty?

It’s quite interesting, as the bot did exactly what it needed to do, and exactly what the community had agreed to, no conflict on that level. Although even a few weeks into the removals users were still turning up on the addbot talk page asking what it was doing removing links, but there was a lovely FAQ page for just that reason!

As for conflicts in edits, toward the start of the removals some edit conflicts and small wars did actually happen with old interwiki bots that people had left running. In these cases addbot would go along and remove interwiki links and old interwiki bots would come along and re-add them, however all communities ended up blocking these old bots to make way for the new way of Wikidata sitelinks.

Other than this early case I wouldn’t really say addbot was conflicty at all, although I can see how people looking at the data in certain ways could come to this conclusion.

What’s your experience with Wikipedia/Wikidata’s bot governance (e.g. BAG and bot policies)? Does it seem to you that they are effective or should there be some change to how bot activities are managed?

So, as I said above, I am a member of the BAG on enwiki, and I was also an admin on Wikidata, able to approve bots. However, I am now a very inactive BAG member and no longer an admin on Wikidata, also due to inactivity. I simply spend too much time working and programming now.

I feel that some level of control and checking definitely makes sense. I remember my first bot request, which I now appreciate was a bad idea, was declined by BAG. If BAG had not been there, I would likely have ended up running it, at least for a bit. So they are definitely effective.

BAG on enwiki is probably the most thorough process, but probably also one of the slowest, other than wikis with very small numbers of users to approve bots.

If I had to pick a new process for bot approvals I wouldn’t really know where to start. But in my opinion, with bots, if you follow the basic guidelines about bots even without any approval you’re not going to cause any / much damage before someone notices you may be doing something wrong or bad in some way.

Again this is probably different on smaller wikis and I imagine a new user coming along with a bot could probably stir up the whole site before anyone noticed.

The researchers who wrote the Oxford paper called for a supervising body to manage bot activities (like WP:BAG) and a set of policies to govern their behavior (like WP:Bot_policy), both of which already exist. As Adam says, cases of true bot-bot conflict have been short-lived and have caused little damage, because these governance mechanisms have been largely effective despite their voluntary, distributed nature.

I don’t mean to paint a picture of perfection in Wikipedia. After all, bot fights do happen (see a list maintained by Wikipedians), but they are rare and short-lived due to the effectiveness of Wikipedia’s bot governance mechanisms. For example:

- In November of 2010, SmackBot and Yobot had a slow-motion edit war (a few edits over the course of a week), disagreeing about some white space on the article about Human hair growth.

- In September 2009, RFC bot edit-warred with itself for 6 hours about whether or not to include a “moveheader” template on the discussion pages for the articles about White-bellied Parrot and Nanday Parakeet.

The researchers who published the Oxford paper didn’t tell this story because they treated bot-bot reverts across Wikipedia as conflict. But the more interesting story is this: Wikipedia is a key example of how to manage bot activities effectively. A few short-lived bot edit wars and effective governance mechanisms may not be as exciting as the idea of robots duking it out in Wikipedia. However, from my perspective as a researcher of socio-technical systems like Wikipedia, the real nature of bot fights reveals generally effective, distributed governance at work in an important field site, and studying it pushes the science of socio-technical systems forward. It’s hard to make headlines with “Bot fights in Wikipedia found to be rare and short-lived”.

Aaron Halfaker, Principal Research Scientist

Wikimedia Foundation

Infographic by Aaron Halfaker, CC BY-SA 4.0. This blog post has been updated with a link to Geiger and Halfaker’s paper, “Operationalizing conflict and cooperation between automated software agents in Wikipedia: A replication and expansion of ‘Even Good Bots Fight’.”
