Vol: 3 • Issue: 11 • November 2013

Reciprocity and reputation motivate contributions to Wikipedia; indigenous knowledge and “cultural imperialism”; how PR people see Wikipedia

With contributions by: Piotr Konieczny, Brian Keegan, Nicolas Jullien, Amir E. Aharoni, Henrique Andrade, Tilman Bayer, Daniel Mietchen, Giovanni Luca Ciampaglia, Dario Taraborelli and Aaron Halfaker

Contents

- 1 What drives people to contribute to Wikipedia? Experiment suggests reciprocity and social image motivations

- 2 Does “cultural imperialism” prevent the incorporation of indigenous knowledge on Wikipedia?

- 3 How PR professionals see Wikipedia: Trends from second US survey

- 4 Report from the inaugural L2 Wiki Research Hackathon

- 5 Briefly

- 5.1 “Iron Law of Oligarchy” (1911) confirmed on Wikia wikis

- 5.2 Twitter activity leads Wikipedia activity by an hour

- 5.3 “Google loves Wikipedia”

- 5.4 New article assessment algorithm scores quality of editors, too

- 5.5 “How do metrics of link analysis correlate to quality, relevance and popularity in Wikipedia?”

- 5.6 Usage of images and sounds is related to the quality of Wikipedia articles

- 5.7 Student perception of Wikipedia’s credibility is significantly influenced by their professors’ opinion

- 5.8 Non-participation of female students on Wikipedia influenced by school, peers and lack of community awareness

- 5.9 Gender gap coverage in media and blogs

- 5.10 German Wikipedia articles become static while English ones continue to develop

- 5.11 New sockpuppet corpus

- 5.12 Workshop on “User behavior and content generation on Wikipedia”

- 6 References

What drives people to contribute to Wikipedia? Experiment suggests reciprocity and social image motivations

Wikipedia relies on the efforts of unpaid volunteers who choose to donate their time to advance the cause of free knowledge. This phenomenon, trivial as it may sound to those acquainted with Wikipedia’s inner workings, has long puzzled economists and social scientists alike, because standard economic theory would not predict that such enterprises (or any other peer production community, such as open source software) could thrive without some form of remuneration. The flip side of direct remuneration, namely passion, enthusiasm and belief in free knowledge (in short, intrinsic motivation), could not by itself, at least according to standard theory, convincingly explain such sustained efforts, given essentially for free.

At the dawn of the open source/libre software movement, some economists noted that successfully contributing to high-profile projects like Linux or Apache may translate into a strong résumé for a software developer. As a way to reconcile traditional economic theory with reality, they proposed that sustained contribution to a peer production system could happen where other forms of extrinsic motivation are available. But what about Wikipedia? The career incentive is largely absent in the case of the free encyclopedia, so is it really the case that intrinsic motivations such as pure altruism cannot be behind the sustained efforts of its contributors?

To understand this, a group of researchers at Sciences Po, Harvard Law School, the University of Strasbourg and other institutions designed a series of online experiments to measure social preferences, and administered them to a group of volunteer Wikipedia editors to determine whether contribution to Wikipedia can be explained by any of the main hypotheses that economists have so far formulated regarding contribution to public goods.[1][2] The researchers considered three hypotheses, two for intrinsic and one for extrinsic forms of motivation: pure altruism, reciprocity, and social image motives.

In more detail, the researchers asked a number of Wikipedia editors and contributors (all with a registered account) to participate in a series of experimental games specifically designed to measure the extent to which people behave according to one or more of these social preferences, for example by either free-riding or contributing to the common pool in a public goods game. In addition, as a proxy measure for the “social image” hypothesis, they checked whether participants had ever received a barnstar on their talk pages and whether they had ever chosen to display any of these on their user page (coding these individuals as “social signallers”). Finally, they matched each participant with their contribution history and sought to understand which of these measures can explain their edit counts.
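For readers unfamiliar with the public goods game mentioned above, the following sketch shows the payoff rule of its standard linear version. The endowment of 20 tokens, the multiplier of 2 and the four-player group are illustrative assumptions, not the parameters used by Algan et al. It shows why free-riding is individually tempting: the player who contributes nothing ends up with the highest payoff, even though the group as a whole earns the most when everyone contributes.

```python
def public_goods_payoffs(contributions, endowment=20.0, multiplier=2.0):
    """Payoffs in a linear public goods game (illustrative parameters).

    Each player keeps whatever part of the endowment they do not contribute;
    the pooled contributions are multiplied and split equally among everyone.
    """
    n = len(contributions)
    # With 1 < multiplier < n, full contribution maximizes the group's total
    # payoff, but each token a player contributes returns only multiplier / n
    # tokens to that player, so free-riding is individually tempting.
    if not 1 < multiplier < n:
        raise ValueError("expected 1 < multiplier < number of players")
    if any(not 0 <= c <= endowment for c in contributions):
        raise ValueError("each contribution must lie between 0 and the endowment")
    share = multiplier * sum(contributions) / n
    return [endowment - c + share for c in contributions]


# Four players: three contribute their whole endowment, one free-rides.
print(public_goods_payoffs([20, 20, 20, 0]))  # [30.0, 30.0, 30.0, 50.0]
```

In a "reciprocity" reading, a player's contribution depends on what they expect the others to contribute; a purely self-interested player contributes zero regardless.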

The results suggest that reciprocity drives contribution among less experienced editors, whereas reputation (social image) better explains the activity of more seasoned editors, though, as the authors acknowledge, the goodness of fit of the regression estimates is not great. The study was at the center of a heated debate within the community about the use of site-wide banners for recruitment purposes. On December 3, one of the authors presented the results at Harvard; the talk is available online as an audio and video recording. According to the Harvard Crimson, he remarked “that the study is still in progress and more data needs to be collected”. The results are so far available as a conference paper and as an unpublished working paper.

Does “cultural imperialism” prevent the incorporation of indigenous knowledge on Wikipedia?

A draft chapter[3] of a book to be published in early 2014 examines the issue of incorporating “Indigenous Knowledge” (IK) into Wikipedia: human knowledge that is not part of codified, peer-reviewed Western-style publishing but is instead transmitted orally in other parts of the world. The problem is not new; perhaps most notably, it was described in the 2011 documentary “People are Knowledge”, which was produced by Indian Wikimedian Achal Prabhala and funded by the Wikimedia Foundation as a fellowship project. The core difficulty is that Wikipedia relies on written reliable sources to verify its content. This article describes Wikipedia’s policies and editing practices that are relevant to incorporating Indigenous Knowledge. In doing so, it makes a rather problematic claim: that “the ‘currency’ of Wikipedia is edit count”. Many Wikipedia editors will find this claim wrong and even offensive, as quality rather than quantity determines an editor’s reputation, and in any case the content matters more than its creator.

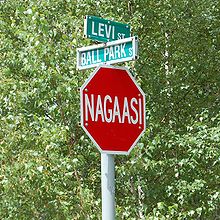

The article presents several valuable and thought-provoking examples of how the rigid referencing rules of the English Wikipedia can be taken to extremes without necessarily achieving the goals of ensuring notability, verifiability and reliability. It notes, for example, that because many of Wikipedia’s editors are laypeople who want to work quickly and fill the gaps that interest them, they are likely to cite sources without reading them fully and in depth, thus undermining the sources’ reliability. Another example is Gi-Dee-Thlo-Ah-Ee, a Cherokee woman who was the subject of a book included in the Library of Congress. An article about her was deleted from the English Wikipedia, mainly because the book was not deemed an independent reliable source, having been published by the Cherokee Nation. The case of the article Makmende (“the first Kenyan Internet meme”) is also cited, although the validity of this example has been questioned (Signpost coverage: “Essay examines systemic bias toward African topics, using disputed deletion example”).

Achal Prabhala’s aforementioned work on oral citations, as well as his practical attempts to challenge the English Wikipedia’s citation policy, are the subject of a large part of this article. It shows that so far Prabhala’s attempts have mostly failed, because the editor community found his citation practices unacceptable. The article analyzes the typical responses of those who oppose oral citations and points out some problems with them. However, it does not yet offer any useful resolution of the issue, and it labels the opposition to oral citations as “cultural imperialism”.

Despite its shortcomings, this article is a good presentation of the issues at hand, as well as of their importance, and it is a good summary of the work done in the field until now.

The second author, Maja van der Velden, had published another article on the same subject some months ago,[4] also referring to Achal Prabhala’s oral citation project and comparing it to two other initiatives, Text, Audio, Movies, and Images (TAMI), and the Brian Deer Classification (BDC). TAMI is a database on Australian Aboriginal culture, BDC a library classification system in use at the Xwi7xwa Library, which specializes in Canadian Aboriginal culture. Indigenous communities were involved in the design of both, resulting in some marked differences from Western (and Wikipedia) design habits, e.g. a flat hierarchy of only four categories in TAMI (those represented in the acronym), or the lack of a “Canada” class in BDC (“United States” exists, at the same level as “Maoris”).

She criticizes, on grounds of principle, the merger of the indigenous knowledge entry on the English Wikipedia into traditional knowledge, adds that the merger did not actually carry over any content from the former into the latter, and objects to the traditional knowledge entry’s heavy focus on intellectual property, to the point of including a screenshot of the article’s table of contents (much like the one pictured here).

After outlining how matters of design are handled on Wikipedia, van der Velden discusses whether Wikipedia’s mission of providing access to the sum of human knowledge might be better served by decentralizing design decisions. This brings her to the regularly recurring idea of decentralizing Wikipedia, and to a discussion of interwiki links that manages not to mention Wikidata.

Overall, the article is an interesting and in parts thought-provoking contribution to the activities around increasing diversity within the Wikimedia community (see, for instance, the Wikimedia Diversity Conference, held in Berlin earlier this month). It would have benefited from a more detailed description of TAMI and BDC and from suggestions as to how their respective community engagement experiences could be transferred and adapted to cross-cultural collaboration in Wikimedia projects.

How PR professionals see Wikipedia: Trends from second US survey

In light of the recent increase in for-hire editing on Wikipedia, often carried out by PR professionals, another timely study has been released:[5] a survey of PR professionals that follows up on one covered in the April 2012 edition of this research report (“Wikipedia in the eyes of PR professionals”). The surveys examine how familiar PR professionals (working not only for for-profit organizations, but also for non-profits, educational institutions, government institutions, and others) are with Wikipedia’s rules. 74% of respondents noted that their institution had a Wikipedia article, a significant increase (5%) over the 2012 survey, though more than half of the PR professionals monitor those articles no more often than quarterly. The study confirms a steady but slow increase in the share of PR professionals who have edited Wikipedia directly: 40% of the 2013 respondents had engaged with Wikipedia through editing (about a quarter of the respondents editing talk pages, and the remainder directly editing main space content), compared to 35% of the 2012 respondents. Over 60% agree that “editing Wikipedia for a client or company is a common practice”, a slight but statistically significant decrease from 2012. While “posing as someone else to make changes in Wikipedia” is not seen as a common practice, it is nonetheless supported by about 15% of respondents in the USA and almost 30% elsewhere (though the latter figure should be treated as tentative, as 97% of the survey respondents came from the US).

At the same time, approximately two thirds of the respondents do not know of or understand Wikipedia’s rules on COI/PR and related topics (defined in this study as Wales’ 2012 “Bright Line” policy proposal, linked to his comment in the Corporate Representatives for Ethical Wikipedia Engagement (CREWE) Facebook group on January 10, 2012, 5:56 am; Facebook login required). Of those who had edited Wikipedia directly, thus breaking the rule, over a third (36%) were aware of the rule, and so knowingly violated the site’s policy.

The widespread ignorance of Wikipedia’s rules reinforces the point that even a decade after Wikipedia’s creation, most of its users do not realize that it is a project “anyone can edit”, much less what that means: 71% of respondents replied that they simply “don’t know” “How Wikipedia articles about their clients or companies are started”, which presumably indicates that they do not understand the basic function and capabilities of the article history feature. Most of the remaining respondents (24% of the total) admit to writing the article themselves; 3% hired a PR firm specializing in this task, 1% hired a “Wikipedia firm” (a concept unfortunately not defined in the article), and only 2% note that they “made a request through Request Article Page”. When it comes to existing articles, only 21% of the respondents wait for the public to make changes; the vast majority of the rest make edits themselves, with 5% outsourcing this to a specialized PR or “Wikipedia firm”.

Respondents who had directly edited Wikipedia for their company or client said their edits typically “stick” most of the time. Over three quarters noted that their changes stick at least half the time; only 8% said they never stick, always being reverted. This raises questions about the effectiveness of Wikipedia’s COI-detection practices, as well as about their desirability (are we failing to revert those changes because we do not realize they are COI-based, or are they reviewed and left alone as net-positive edits?).

Sixty percent of the respondents note that the articles about their clients or companies contain factual errors they would like to correct; many observed that potentially reputation-harming errors persist for many months, or even years. This statistic poses an interesting question about Wikipedia’s responsibility to the world: by denying PR people the ability to correct such errors, are we not hurting our own mission?

The majority of respondents were not satisfied with existing Wikipedia rules, feeling that the community treats PR professionals unfairly by denying them equal participation rights; even among the respondents who tried to follow Wikipedia policies and raised concerns on the article’s talk page rather than editing it directly, 10% noted that they had to wait weeks for any response, and 13% said they never received one.

Regarding the new editor experience, it is also worth noting that only a quarter of PR professionals felt that making edits was easy; the majority complained that editing Wikipedia is time-consuming or even “nearly impossible”.

Report from the inaugural L2 Wiki Research Hackathon

On November 9, 2013, a group of Wikimedia Foundation researchers, academics and community members hosted the inaugural Labs2 Wiki Research Hackathon: the first in a series of global events meant to “facilitate problem solving, discovery and innovation with the use of open data and open source tools” (read the full announcement on the Wikimedia Blog). The event brought together attendees from local meetups in Oxford, Mannheim, Chicago, Minneapolis, Seattle and San Francisco, along with a number of remote participants. Participants laid the groundwork for new projects studying Wikipedia, including a study of newcomer retention focused on women, explorations of using Wikipedia as a multilingual corpus, an examination of the effectiveness of help desks on Wikipedia, and several others. A series of presentations was given and streamed during the event, including:

- a brief tutorial by Dario Taraborelli on how to access Wikimedia database replicas via Tool Labs.

- a presentation by Stephen LaPorte on Wikimedia’s RecentChanges IRC feeds (slides).

- a presentation by Max Klein on retrieving and manipulating structured data from Wikidata (video and companion blog post).

- a demo by Mahmoud Hashemi of the wapiti library – a MediaWiki API wrapper written in Python “to simplify data retrieval from the Wikipedia API without worrying about query limits, continue strings, or formatting”.

- a presentation by Haitham Shammaa on Wikipedia community graph visualization using Gephi (video and research page on Meta).

The organizers are planning to host a new hackathon in spring 2014 and are actively seeking volunteers to host local and virtual meetups (contact: wrh@wikimedia.org).

Briefly

“Iron Law of Oligarchy” (1911) confirmed on Wikia wikis

An empirical study[6] of 683 Wikia wikis (rather than WMF projects, but with significant implications for them as well) found support for the claim that the iron law of oligarchy holds in wikis, i.e. that the wiki’s transparent and egalitarian model does not prevent the most active contributors from obtaining significant and disproportionate control over those projects. In particular, the study found that as wiki communities grow, 1) they are less likely to add new administrators; 2) the number of edits made by administrators to administrative “project” pages increases; and 3) the number of edits made by experienced contributors that are reverted by administrators also grows. The authors also note that while there are some interesting exceptions to this rule, showing that wikis can on occasion function as egalitarian, democratic public spaces, on average “as wikis become larger and more complex, a small group – present at the beginning – will restrict entry into positions of formal authority in the community and account for more administrative activity while using their authority to restrict contributions from experienced community members”.

Twitter activity leads Wikipedia activity by an hour

Journalists have long speculated that after a breaking news event, users follow a “Twitter-to-Google-to-Wikipedia” path to seek information.[supp 1] Researchers at Ca’ Foscari University of Venice and the Scuola Normale Superiore di Pisa published a paper at the DUMBMOD workshop at CIKM 2013 comparing trending named entities on Twitter with pageview requests on Wikipedia.[7] The research uses entity linking to connect concepts extracted from 260 million tweets collected in November 2012. Comparing the time series of mentions on Twitter with pageview requests on Wikipedia,[supp 2] the researchers found that the cross-correlation from Twitter to Wikipedia peaked at a lag of -1 hour, indicating that Twitter topics lead Wikipedia requests by one hour. However, entity resolution is difficult for some generic names, which results in spurious correlations.
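The lead-lag analysis described above can be illustrated with a small sketch: correlate two hourly series at a range of lags and report the lag with the highest correlation. This is a generic illustration using plain Pearson correlation on a made-up toy series; the paper’s actual preprocessing and normalization may differ. The sign convention (a negative lag means the first series leads) is chosen here to match the “-1 hour” reading above.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def lagged_correlation(x, y, lag):
    """Correlate x[t + lag] with y[t] over the overlapping window.

    With this convention, a peak at a negative lag means x leads y:
    lag = -1 pairs each hour of y with the previous hour of x.
    """
    if lag < 0:
        return pearson(x[:lag], y[-lag:])
    if lag > 0:
        return pearson(x[lag:], y[:-lag])
    return pearson(x, y)


def best_lag(x, y, max_lag):
    """Return the lag in [-max_lag, max_lag] with the highest correlation."""
    return max(range(-max_lag, max_lag + 1),
               key=lambda k: lagged_correlation(x, y, k))


# Toy hourly counts (made up, not data from the paper): the "Wikipedia"
# series repeats the "Twitter" burst one hour later.
tweets = [2, 3, 2, 40, 90, 35, 10, 5, 3, 2, 2, 3]
pageviews = [5] + tweets[:-1]
print(best_lag(tweets, pageviews, max_lag=3))  # -1: Twitter leads by one hour
```

Real series would also need detrending or normalization for daily cycles before such a comparison is meaningful.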

“Google loves Wikipedia”

Why? And what about other search engines? These are the questions posed by a study[8] announced in a blog post titled “General or special favouritism? Wikipedia-Google relationship reexamined with Chinese Web data”, an updated version of research covered previously in this research report after it was presented at WikiSym 2013 (“It’s ‘search engines favor user-generated encyclopedias’, not ‘Google favors Wikipedia’”). Back then, only an abstract of the paper and an earlier blog post were available; now a draft paper can be accessed. Han-Teng Liao presents interesting data backing up his claim that neither Google nor Wikipedia is unique; rather, we are seeing a more general rule that “search engines favor user-generated encyclopedias”. Besides its methodology, the study’s valuable contribution is its data from the Chinese Internet, though, as he notes, further research is needed on “the cases of Russia (where Yandex dominates) and South Korea (where Naver and other dominate)”.

New article assessment algorithm scores quality of editors, too

A paper[9] presented at the recent Conference on Information and Knowledge Management describes a novel method for assessing the quality of article content based on the implicit review of editors who choose not to remove it. The authors argue that systems using similar strategies, such as WikiTrust (content persistence), suffer from a critical bias: their algorithms assume that all content removals are equal. Suzuki & Yoshikawa’s method uses an iterative strategy, similar to PageRank, to compute the mutual quality of editors and of the articles they edit together. To evaluate their predictions, they pit their algorithm against WikiTrust in predicting Version 1.0 Editorial Team assessment ratings and human (graduate student) judgments for a sample of articles, showing that their approach is more accurate in predicting both quality assessments. While they admit that the algorithm is computationally intensive, they also suggest that PageRank-like algorithms such as theirs can easily be adapted to run on a MapReduce framework.
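To give a feel for the kind of mutual evaluation described above, here is a generic HITS-style iteration on a bipartite editor-text graph. It is a simplification for illustration, not Suzuki & Yoshikawa’s published formula: the input format (pairs of an editor and a text that editor left in place), the fixed iteration count and the toy data are all assumptions, and it ignores who removed what, which is precisely the distinction the paper says WikiTrust misses.

```python
from math import sqrt


def mutual_scores(kept, iterations=50):
    """HITS-style mutual reinforcement between editors and texts.

    `kept` is a list of (editor, text) pairs, each meaning "this editor
    reviewed this text and left it in place". A text scores highly when
    high-scoring editors keep it; an editor scores highly when the texts
    they keep score highly.
    """
    if not kept:
        return {}, {}
    editor_score = {e: 1.0 for e, _ in kept}
    text_score = {t: 1.0 for _, t in kept}
    for _ in range(iterations):
        # Text score: sum of the scores of the editors who kept it.
        new_text = dict.fromkeys(text_score, 0.0)
        for editor, text in kept:
            new_text[text] += editor_score[editor]
        # Editor score: sum of the freshly updated scores of the texts they kept.
        new_editor = dict.fromkeys(editor_score, 0.0)
        for editor, text in kept:
            new_editor[editor] += new_text[text]
        # Rescale each vector to unit length so the power iteration converges
        # instead of growing without bound.
        for scores in (new_text, new_editor):
            norm = sqrt(sum(v * v for v in scores.values()))
            for key in scores:
                scores[key] /= norm
        editor_score, text_score = new_editor, new_text
    return editor_score, text_score


# Hypothetical review log: alice keeps t1 and t2, bob keeps t1, carol keeps t3.
editors, texts = mutual_scores([("alice", "t1"), ("alice", "t2"),
                                ("bob", "t1"), ("carol", "t3")])
print(sorted(editors, key=editors.get, reverse=True))  # ['alice', 'bob', 'carol']
```

Each update is a sum over an edge list, which is one reason such iterations map naturally onto MapReduce-style frameworks, in line with the authors’ suggestion.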

“How do metrics of link analysis correlate to quality, relevance and popularity in Wikipedia?”

A paper with this title[10] presented at the Brazilian Symposium on Multimedia and the Web examines how, on the Portuguese Wikipedia, data from several link analysis methods (indegree, outdegree, PageRank and its variants) correlate with the community’s assessments of article quality and importance, and also with page access data, using the Kendall tau rank correlation coefficient. Using data from sites in the Brazilian Internet domain and from the Portuguese Wikipedia, the study observed that link analysis results correlate more with quality and popularity than with importance, and showed that outdegree (the number of links a page contains) is the metric most correlated with quality. The authors point out that outdegree is moderately related to article length, suggesting that length may be one criterion in the community’s quality evaluation. The paper also showed that “simple metrics” (such as indegree and outdegree) can give results competitive with more complex metrics (such as PageRank), and reinforced the observation that links play a different role in Wikipedia pages than in the rest of the web.
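Kendall’s tau, the rank correlation used in both Brazilian papers, compares every pair of items and asks whether two rankings order them the same way. The minimal tau-a sketch below applies no tie correction; with ties (common for coarse quality grades), variants such as tau-b are normally used, and which variant the papers used is not stated here. The articles and numbers are hypothetical.

```python
from itertools import combinations


def kendall_tau_a(xs, ys):
    """Kendall's tau-a between two paired lists (O(n^2), no tie correction).

    Counts concordant pairs (both lists order the two items the same way)
    minus discordant pairs, divided by the total number of pairs.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two paired lists with at least two items")
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        sign = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
        # Pairs tied in either list count as neither.
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical articles: community quality grade (higher is better)
# and outdegree (number of outgoing links). Made-up numbers.
quality = [1, 2, 3, 4, 5]
outdegree = [12, 30, 25, 60, 80]
print(kendall_tau_a(quality, outdegree))  # 0.8 (9 concordant, 1 discordant pair)
```

For real data, `scipy.stats.kendalltau`, which computes tau-b by default, is a more practical choice.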

Another paper from this conference (coauthored by the same authors with a fourth researcher)[11] likewise uses the Kendall tau rank correlation coefficient, here to look for relations between the number of media files in a Portuguese Wikipedia article and its quality as evaluated by the community and by bots. The paper compares articles with images and articles with sounds separately, finding a moderate correlation between the number of images and the ratings given by both the community and bots, and a low correlation between the ratings and the number of sound files. These results cannot be considered conclusive because of the small number of Portuguese Wikipedia articles with media content; repeating the experiment on a larger Wikipedia could yield more solid conclusions.

Student perception of Wikipedia’s credibility is significantly influenced by their professors’ opinion

A poster[12] presented earlier this month at the ASIST 2013 conference of the Association for Information Science and Technology reports on the results of a 2011 web survey among US undergraduate students with 123 usable responses. In an earlier paper (review: “The featured article icon and other heuristics for students to judge article credibility“) the author had found that students perceive Wikipedia’s credibility as higher than their professors do, and that this judgment is influenced by their peers. Still, the new results show “that the more professors approved of Wikipedia, the more students used it for academic purposes. In addition, the more students perceived Wikipedia as credible, the more they used it for academic purposes”, indicating “that formal authority still influences students’ use of user-generated content (UGC) in their formal domain, academic work.”

Non-participation of female students on Wikipedia influenced by school, peers and lack of community awareness

Another poster[13] from the same conference describes a “preliminary study on non-contributing behaviors among college-aged Wikipedia users”, based on a qualitative analysis of in-depth interviews with 13 university students who had not contributed to Wikipedia before, 11 of them female. Participants had been using Wikipedia since middle school, and generally observed that school was “the most influential source” of information about negative sides of Wikipedia “such as reliability and credibility issues.” Another result is that respondents felt disconnected from the community behind Wikipedia, being “generally unaware of the presence and interaction between contributors and readers of Wikipedia”, attributed by one participant and the author to the fact that these students “did not observe the traces of how and who has changed information they were reading. This lack of visible interactions within the system affected them to ignore the role of participation among contributors or Wikipedia community, and failed to cultivate the culture of contribution among its users.” Some participants also cited a lack of confidence in their ability to contribute.

Overall, the author concludes that “the participants’ lack of intention for contributing to Wikipedia can be explained by [their own experience with Wikipedia and interactions with their social groups]. Negative attitudes toward Wikipedia prevailing in the past and new social environment (i.e., high school and college) influenced participants to shape negative images about Wikipedia. Participants did not have social groups that can trigger contributing behaviors, nor identified themselves as a potential member of Wikipedians. Rather, their descriptions of Wikipedians showed that female students’ cognitive distinction from Wikipedians was remarkable, which were related to their devaluations of Wikipedians or works of Wikipedians among their social groups.”

While cautioning about the small sample size of her study, the author suggests possible solutions: “Highlighting profiles and works of young contributors and exposing their contributing activities on social network sites (i.e., Facebook)” and that “interfaces should be changed to invite engagement of these casual users.”

Gender gap coverage in media and blogs

An article in the Journal of Communication Inquiry[14] studies how concern about Wikipedia’s gender gap has been treated in the news, based on a qualitative analysis of 42 articles from US news media and blogs, and 1,336 comments from online readers. The authors argue that this discussion can be seen as an example of a “broader backlash against women, and particularly feminism” in the US news media and blogs. Judging from the article, media views of this gap reflect the whole range of views about feminism, from the most concerned and well-documented to the most stupid and misogynist. However, their synthesis of these opinions and their discussions with some leaders at Wikipedia and the Wikimedia Foundation (among them Sue Gardner) lead the authors to argue that the problem has not yet been properly addressed, because of its complexity, but also because of the lack of a clear political decision by the project’s management to tackle it.

German Wikipedia articles become static while English ones continue to develop

A conference paper titled “Identifying multilingual Wikipedia articles based on cross language similarity and activity”[15] examines the content and development of articles on the same subject in English and German, using static dumps of Wikipedia. It is quite limited in scope, comparing only two of the almost 300 languages Wikipedia supports, but it suggests several interesting analysis methodologies, among them comparing machine translation by the freely licensed Moses engine with Microsoft Bing Translate and Google Translate, and it finds that Moses compares favorably in its usefulness for this kind of work. One of the paper’s conclusions is that, in general, German articles tend to become static after their initial development, while English articles tend to continue developing over time.

New sockpuppet corpus

An arXiv preprint[16] announces “the first corpus available on real-world deceptive writing”: a set of talk page comments made by users suspected of sockpuppeting in 410 English Wikipedia sockpuppet investigation (SPI) cases (305 where the suspicion was confirmed by a checkuser or other investigating administrator, and 105 where it was not), plus a control set of 213 cases created artificially from editors not previously involved in SPI cases. The resulting dataset of 623 cases is being made available online under a Creative Commons license, which could help foster research by others.

In a longer paper published earlier this year (review: “Sockpuppet evidence from automated writing style analysis”), the authors had developed a machine-learning-based sockpuppet detection method and tested it on a smaller dataset of 77 cases. In their brief (four-page) new preprint, they report that they successfully tested the method on the new, larger dataset, slightly improving the sockpuppet detection accuracy (F-measure) from 72% to 73%. (As one of the authors pointed out, in practice the method would be used alongside other evidence to establish sockpuppetry with sufficient certainty.)
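For readers unfamiliar with the F-measure quoted above: it is the harmonic mean of precision (the share of cases flagged as sockpuppetry that really are sockpuppetry) and recall (the share of actual sockpuppetry cases that get flagged). A minimal sketch with made-up counts follows; how the preprint averages the score (for example over classes or cross-validation folds) is not described here and may differ.

```python
def f_measure(tp, fp, fn, beta=1.0):
    """F-beta score from confusion counts; beta=1 gives the usual F1."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Made-up counts for illustration, not the paper's confusion matrix:
# 30 sockpuppet cases correctly flagged, 10 false alarms, 10 missed.
print(f_measure(tp=30, fp=10, fn=10))  # 0.75
```

Because it is a harmonic mean, the F-measure stays low if either precision or recall is low, so a detector cannot score well simply by flagging everyone.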

Workshop on “User behavior and content generation on Wikipedia”

A workshop with this title took place at the Centre for European Economic Research in Mannheim, Germany, on November 8–9, jointly organized with the Leibniz-Institut für Wissensmedien in Tübingen. The research presented took a broad range of approaches to the study of Wikipedia, including the study of the “Iron Law of Oligarchy”[6] discussed above. These were complemented by several presentations from the Wikimedia side: on the many interactions between the Wikimedia and research communities, on a wishlist of research that may benefit the Wikimedia community, and on the recently completed research project RENDER, in which Wikimedia Deutschland was a partner. The discussions at the workshop centered on ways in which interaction between the research and Wikimedia communities could be broadened and made more mutually beneficial. This research report (published both as the Wikimedia Research Newsletter and as the Signpost’s Recent research section), the Research namespace on Meta-Wiki and Wikimedia Labs were mentioned in this regard. Also discussed was the issue that most papers in the field, along with their tools and associated data, are not freely accessible, even though the openness of the Wikimedia ecosystem accounts for a significant portion of the motivation to study it.

References

- ↑ Yann Algan, Yochai Benkler, Mayo Fuster Morell, Jérôme Hergueux: Cooperation in a Peer Production Economy. Experimental Evidence from Wikipedia. Working paper, PDF.

- ↑ Yann Algan, Yochai Benkler, Mayo Fuster Morell, Jérôme Hergueux: Cooperation in a Peer Production Economy. Experimental Evidence from Wikipedia. Aix-Marseille School of Economics, 12th journées Louis-André Gérard-Varet (June 2013) PDF

- ↑ Peter Gallert, Maja van der Velden: “Reliable Sources for Indigenous Knowledge: Dissecting Wikipedia’s Catch–22”. Draft, to be published in early 2014 as a chapter of the post-conference book for the Indigenous Knowledge Technology Conference (IKTC) 2011 (editors: N. Bidwell and H. Winschiers–Theophilus) PDF

- ↑ Maja van der Velden (2013) “Decentering Design: Wikipedia and Indigenous Knowledge”. International Journal of Human-Computer Interaction 29 (4): 308–316. doi:10.1080/10447318.2013.765768.

- ↑ Marcia W. DiStaso: Perceptions of Wikipedia by Public Relations Professionals: A Comparison of 2012 and 2013 Surveys. Public Relations Journal Vol. 7, No. 3, ISSN 1942-4604, Public Relations Society of America, 2013 PDF

- ↑ a b Aaron Shaw, Benjamin Mako Hill: Laboratories of Oligarchy? How The Iron Law Extends to Peer Production (draft paper) PDF

- ↑ (2013) “Twitter anticipates bursts of requests for Wikipedia articles”. DUMBMOD workshop, CIKM 2013: 5–8. doi:10.1145/2513577.2538768.

- ↑ Han-Teng Liao (2013) How does localization influence online visibility of user-generated encyclopedias? A study on Chinese-language Search Engine Result Pages (SERPs). Draft paper. HTML

- ↑ Yu Suzuki, Masatoshi Yoshikawa: Assessing quality score of Wikipedia article using mutual evaluation of editors and texts. Proceedings of the 22nd ACM international Conference on information & knowledge management, Pages 1727-1732. ACM New York, NY, USA 2013. http://dx.doi.org/10.1145/2505515.2505610

- ↑ Raíza Hanada, Marco Cristo, Maria da Graça Campos Pimentel: How do metrics of link analysis correlate to quality, relevance and popularity in Wikipedia? Proceedings of the 19th Brazilian symposium on Multimedia and the web, Pages 105-112. ACM New York, NY, USA 2013 http://dx.doi.org/10.1145/2526188.2526198

- ↑ Marcelo Yuji Himoro, Raíza Hanada, Marco Cristo, Maria da Graça Campos Pimentel: An investigation of the relationship between the amount of extra-textual data and the quality of Wikipedia articles. Proceedings of the 19th Brazilian symposium on Multimedia and the web, Pages 333-336. ACM New York, NY, USA 2013 http://dx.doi.org/10.1145/2526188.2526218

- ↑ Sook Lim: Does Formal Authority Still Matter in the Age of Wisdom of Crowds?: Perceived Credibility, Peer and Professor Endorsement in Relation to College Students’ Wikipedia Use for Academic Purposes. ASIST 2013, November 1-6, 2013, Montreal, Quebec, Canada. PDF

- ↑ Jinyoung Kim: Wikipedians From Mars: Female Students’ Perceptions Toward Wikipedia. ASIST 2013, November 1-6, 2013, Montreal, Quebec, Canada. PDF

- ↑ (2013) “(Re)triggering Backlash: Responses to News About Wikipedia’s Gender Gap”. Journal of Communication Inquiry 37 (4): 284. doi:10.1177/0196859913505618.

- ↑ Khoi-Nguyen Tran, Peter Christen: Identifying multilingual Wikipedia articles based on cross language similarity and activity. Proceedings of the 22nd ACM international conference on information & knowledge management, Pages 1485-1488, ACM New York, NY, USA 2013, http://dx.doi.org/10.1145/2505515.2507825

- ↑ Thamar Solorio, Ragib Hasan, Mainul Mizan: Sockpuppet Detection in Wikipedia: A Corpus of Real-World Deceptive Writing for Linking Identities http://arxiv.org/abs/1310.6772

- Supplementary references:

- ↑ Seward, Zachary M. (13 August 2009). Here’s the AP Document We’ve Been Writing About. Nieman Lab, Harvard University. “…the new routine of Twitter-to-Google-to-Wikipedia contrasts sharply with the behavior of users in August of 1997…”

- ↑ Page view statistics for Wikimedia projects. Wikimedia Foundation. Retrieved on 25 November 2013.

Wikimedia Research Newsletter

This newsletter is brought to you by the Wikimedia Research Committee and The Signpost
