The past few years have seen an explosion of journalism, scholarship, and advocacy around the topic of ethical AI. This attention reflects a growing recognition that technology companies often fail to put the needs of the people who use machine learning (or “AI”) technology, and of society as a whole, ahead of their business goals.

Much of the public conversation on the topic of ethical AI has revolved around general principles like fairness, transparency, and accountability. Articulating the principles that underlie ethical AI is an important step. But technology companies also need practical guidance on how to apply those principles when they develop products based on AI, so that they can identify major risks and make informed decisions.

What would a minimum viable process (MVP) for ethical AI product development look like at Wikimedia, given our strengths, weaknesses, mission, and values? How do we use AI to support knowledge equity, ensure knowledge integrity, and help our movement thrive without undermining our values?

Towards an MVP for ethical AI

The Wikimedia Foundation’s Research team has begun to tackle these questions in a new white paper. Ethical & Human centered AI at Wikimedia takes the 2030 strategic direction as a starting point, building from the observation that “Developing and harnessing technology in socially equitable and constructive ways—and preventing unintended negative consequences—requires thoughtful leadership and technical vigilance.” The white paper was developed through an extensive literature review and consultation with subject matter experts, and builds off of other recent work by the Foundation’s Research and Audiences teams.

The white paper has two main components. First, it presents a set of risk scenarios—short vignettes that describe the release of a hypothetical AI-powered product, and some plausible consequences of that release on Wikimedia’s content, contributors, or readers. Second, it proposes a set of improvements we can make to the process we follow when we develop AI-powered products, and to the design of the products themselves, that will help us avoid the negative consequences described in the scenarios.

Identifying and addressing risks

The risk scenarios are intended to spur discussion among AI product stakeholders—product teams, research scientists, organizational decision-makers, and volunteer communities. Scenarios like these can be used in discussions around product planning, development, and evaluation to raise important questions. They can help us uncover assumptions that might otherwise be left unstated, and highlight tensions between immediate goals and foundational values. The goal is to help people grapple with these trade-offs and identify alternative approaches that minimize the risk of unintended consequences.

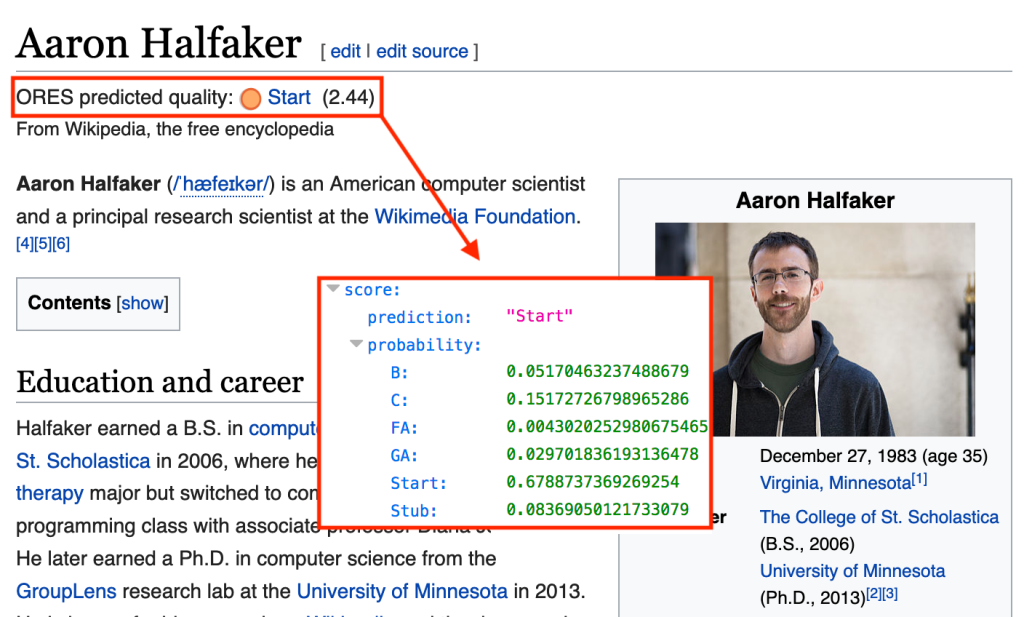

Each of the six risk scenarios addresses a complex ethical issue that AI products can make worse, such as the risk of reinforcing systemic bias, discouraging diversity, and creating inequity in access to information. They also help uncover subtler issues, such as the risk of disrupting community workflows or subverting editorial judgement when we automate processes that are currently performed by people. While the negative outcomes described in the risk scenarios are hypothetical, each one is based on a realistic Wikimedia-specific AI product use case.

The eight process improvement proposals described in the white paper lay out courses of action that Wikimedia can take when developing AI products. Following these recommendations can help researchers and product teams identify risks and prevent negative impacts, and ensure that we continue to get better at building AI products over time.

Some of the proposals focus on improving our software development process for AI products. They describe steps we should take when we develop machine learning algorithms, assess potential product applications for those algorithms, deploy those products on Wikimedia websites, and evaluate success and failure.

Other proposals focus on the design of the AI technologies themselves, and the tools and user interfaces we build around them. They describe ethical design patterns intended to allow the readers and contributors who use our AI products to understand how the algorithms work, provide feedback, and take control of their experience.

Looking forward

The technological, social, and regulatory landscape around AI is changing rapidly. The technology industry as a whole has only recently begun to acknowledge that the ethos of “move fast and break things” is neither an effective nor an ethical way to build complex and powerful products capable of having unexpected, disruptive, and often devastating impacts on individuals, communities, and social institutions. As a non-profit, mission-driven organization with a global reach, the Wikimedia Foundation must hold itself to a higher standard. We can’t afford to build first and ask ethical questions later.

In many ways, the Wikimedia movement is ahead of the game here. We are a geographically and culturally diverse group of people united by a common cause. We already practice the kind of transparency, values-driven design, and consensus-based decision-making that are necessary to leverage the opportunities presented by AI technology while avoiding the damage it can cause. Because the Wikimedia Foundation serves as a steward for the code, content, and communities within the Movement, it is important that we consider the kinds of risks outlined in this white paper and adopt solutions to anticipate and address them.

You can read more about this project on Meta-Wiki and view the white paper on Wikimedia Commons.

Jonathan T. Morgan, Senior Design Researcher, Wikimedia Foundation
