For the past few months, Silvia Gutiérrez and Giovanna Fontenelle (from the Culture and Heritage team at the Wikimedia Foundation) have been publishing a series of Diff posts analyzing the results of the collaborative session that sought to build a direct bridge between the Library-Wikidata community and WMF during the 2023 LD4 Conference on Linked Data.

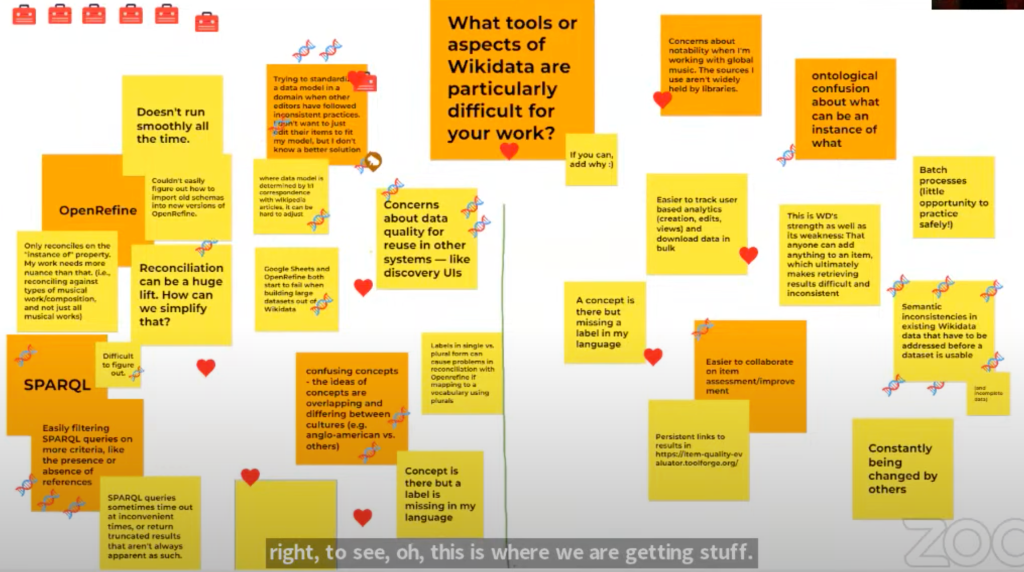

This fifth post in the series goes deep into the fourth slide of the workshop: the challenges, especially regarding tools, that participants faced when working with Wikidata as librarians. We asked them to sort their answers by tool or aspect and to explain why each one was a challenge. We also invited them to add a toolbox emoji (🧰) or a DNA emoji (🧬) to any virtual post-it they agreed with.

OpenRefine

The most commented-on tool on the Jamboard was OpenRefine, which is “…a free data wrangling tool that can be used to process, manipulate and clean tabular (spreadsheet) data and connect it with knowledge bases (…) It is widely used by librarians, in the cultural sector, by journalists and scientists, and is taught in many curricula and workshops around the world.” (OpenRefine page on Meta-Wiki). Wikimedians also use it heavily to batch upload data to Wikidata and, since 2022, to batch upload files and structured data to Wikimedia Commons. Interestingly, in our previous post, about the tools people love or are excited about, OpenRefine received the most votes (four in total), yet it also appears among the challenges discussed here.

Some comments highlighted difficult aspects of the tool, especially when working with Wikidata, such as: “[It c]an be challenging to reconcile really common names when there is not much data to differentiate one identity from another in authority data.” This is especially true on Wikidata, which contains a great many names and sometimes not enough context to tell them apart. In such cases, most people have to check matches one by one to avoid mistakes. Along the same lines, participants mentioned problems reconciling against the “instance of” (P31) property and working with outdated schemas. Someone even noted that “reconciliation can be a huge lift” and asked: “How can we simplify that?”
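OpenRefine matches names against Wikidata through a reconciliation service, and one way to cope with common names is to send extra properties alongside each name so the service has more to go on. The following is a minimal Python sketch of that idea, not how OpenRefine itself is implemented. It assumes the Reconciliation Service API's batch format (a `queries` form field holding JSON keyed `q0`, `q1`, and so on) and OpenRefine's default Wikidata endpoint `https://wikidata.reconci.link/en/api`; the helper names, the User-Agent string, and the example property values are hypothetical. Candidates that the service does not flag as a `match` are collected for the one-by-one checking described above.

```python
import json
import urllib.parse
import urllib.request

# Assumption: OpenRefine's default Wikidata reconciliation endpoint (English).
ENDPOINT = "https://wikidata.reconci.link/en/api"


def build_reconciliation_queries(names, type_qid="Q5", extra_properties=None):
    """Build a batch of reconciliation queries keyed "q0", "q1", and so on.

    type_qid restricts candidates to a class (Q5 = human).
    extra_properties is a hypothetical mapping from row index to a list of
    (property ID, value) pairs used to tell similar names apart.
    """
    extra_properties = extra_properties or {}
    queries = {}
    for i, name in enumerate(names):
        query = {"query": name, "type": type_qid, "limit": 5}
        props = extra_properties.get(i)
        if props:
            # Property hints are sent as {"pid": ..., "v": ...} objects.
            query["properties"] = [{"pid": pid, "v": value} for pid, value in props]
        queries[f"q{i}"] = query
    return queries


def reconcile(queries, endpoint=ENDPOINT, user_agent="example-script/0.1"):
    """POST the batch as the form field "queries" and return the parsed JSON."""
    data = urllib.parse.urlencode({"queries": json.dumps(queries)}).encode("utf-8")
    req = urllib.request.Request(endpoint, data=data, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)


def needs_manual_review(response):
    """Return the query keys for which no candidate is flagged as a match."""
    return [
        key
        for key, result in response.items()
        if not any(candidate.get("match") for candidate in result.get("result", []))
    ]
```

For instance, `build_reconciliation_queries(["Maria Silva"], extra_properties={0: [("P569", "1950")]})` adds a date of birth (P569) hint to the query. Whether a given hint actually narrows the candidates depends on how the service scores that property, so this is a starting point rather than a fix for sparse authority data.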

Participants also reported that OpenRefine can fail or run slowly: “Doesn’t run smoothly all the time–especially when system is slow at times” and “Google Sheets and OpenRefine both start to fail when building large datasets out of Wikidata”, which are important considerations. Some of these issues may be hard to overcome, but OpenRefine remains one of the most beloved and widely used tools, and you can now learn how to use it through the WikiLearn course “OpenRefine for Wikimedia Commons: the basics”, which is available to anyone with a Wikimedia account.

SPARQL Query

The next most-mentioned aspect on the Jamboard was SPARQL, which received five DNA emojis (🧬) in total. These comments relate to the Wikidata Query Service (and the Wikimedia Commons Query Service), platforms that Wikimedians use to retrieve information from Wikidata (and from structured data on Commons). To express these requests, the services use SPARQL, which is “…a semantic query language for databases” according to English Wikipedia. The language can indeed be difficult to learn, as one comment put it: “Difficult to figure out (1 🧬)”.

As with OpenRefine, participants also highlighted some technical problems: “SPARQL queries sometimes time out at inconvenient times, or return truncated results that aren’t always apparent as such.” They also suggested an improvement: “Easily filtering SPARQL queries on more criteria, like the presence or absence of references.” This last comment received three DNA emojis (🧬).

How to use the Query Helper to edit a query (Jonas Kress (WMDE), CC BY-SA 4.0, via Wikimedia Commons)
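For readers curious about what filtering by references involves today, here is a minimal Python sketch (not an official tool) that builds a query for statements of a given property with or without references. It assumes the Wikidata RDF model, in which a statement node points to its references through `prov:wasDerivedFrom`, and the prefixes (`p:`, `ps:`, `prov:`) that are predefined on the Wikidata Query Service at `https://query.wikidata.org/sparql`. The function names and the User-Agent string are hypothetical.

```python
import json
import urllib.parse
import urllib.request

WDQS_ENDPOINT = "https://query.wikidata.org/sparql"


def statements_by_reference_query(prop, referenced=False, limit=100):
    """Build a SPARQL query for statements of `prop` with or without references.

    A statement node links to its references via prov:wasDerivedFrom, so
    FILTER EXISTS / FILTER NOT EXISTS on that triple selects one or the other.
    """
    if not (prop.startswith("P") and prop[1:].isdigit()):
        raise ValueError(f"not a property ID: {prop!r}")
    exists = "EXISTS" if referenced else "NOT EXISTS"
    return f"""SELECT ?item ?value WHERE {{
  ?item p:{prop} ?statement .
  ?statement ps:{prop} ?value .
  FILTER {exists} {{ ?statement prov:wasDerivedFrom ?ref . }}
}}
LIMIT {int(limit)}"""


def run_query(query, user_agent="example-script/0.1"):
    """Send the query to the Wikidata Query Service and return result bindings."""
    params = urllib.parse.urlencode({"query": query, "format": "json"})
    req = urllib.request.Request(
        f"{WDQS_ENDPOINT}?{params}", headers={"User-Agent": user_agent}
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)["results"]["bindings"]
```

For example, `statements_by_reference_query("P214")` asks for VIAF ID (P214) statements that have no reference. Broad queries like this may run into the time limits participants mentioned, so in practice you would likely add patterns that narrow the set of items (for instance, to one collection) before running them with `run_query`.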

Other aspects

Beyond tools, the remaining aspects participants highlighted during the workshop concern general Wikidata concepts and fall into two groups:

1 – Semantic inconsistencies

As one of the most-endorsed comments (seven 🧬) put it, the most difficult part is the semantic inconsistencies. Here are all the Jamboard comments that reflect this difficulty:

- “Semantic inconsistencies in existing Wikidata data that have to be addressed before a dataset is usable (and incomplete data);”

- “A concept is there but missing a label in my language;”

- “Ontological confusion about what can be an instance of what;”

- “Trying to standardize a data model in a domain when other editors have followed inconsistent practices. I don’t want to just edit their items to fit my model, but I don’t know a better solution;”

- “Where data model is determined by 1:1 correspondence with Wikipedia articles, it can be hard to adjust;”

- “Confusing concepts – the ideas of concepts are overlapping and differing between cultures (e.g. anglo-american vs. others);”

- “Labels in single vs. plural form can cause problems in reconciliation with OpenRefine if mapping to a vocabulary using plurals;”

- “This is WD’s strength as well as its weakness: That anyone can add anything to an item, which ultimately makes retrieving results difficult and inconsistent;”

- “Other people changing your data model afterwards (to be more consistent with their own)–not sure this is a problem but there can be disagreement.”

All of these concerns are extremely important and valid. Every Wikidatan runs into these difficulties while learning to understand and contribute to Wikidata, even at more advanced levels. For those who want to explore this topic further, we suggest looking for a Wikidata:WikiProject aligned with the domain you are modeling, where you can find existing modeling conventions or help establish them yourself. For example, Wikidata:WikiProject Heritage institutions set out to define which properties should be used to model Wikidata items for heritage institutions, such as museums, libraries, and archives.

2 – Notability

The remaining main comments on the Jamboard concern the notability of data, both when adding new data and when retrieving existing data. Here are all the Jamboard comments that reflect this difficulty:

- “Concerns about data quality for reuse in other systems — like discovery UIs;”

- “Concerns about notability when I’m working with global music. The sources I use aren’t widely held by libraries;”

- “Easier to collaborate on item assessment/improvement.”

Notability is a difficult issue, especially in combination with semantic inconsistencies. One reason consistency matters is that the data must be able to interoperate with other databases and be reusable.

Notability is also a concern because it might exclude topics, sources, or references that are not considered notable enough due to certain biases (gender, language, origin, etc.). To understand Wikidata’s notability criteria, see Wikidata:Notability. At the bottom of that page, the Wikidata community adds: “If the data you’re trying to add falls outside of these notability guidelines, (…) our sibling projects, Wikibase Cloud and Wikibase Suite could be a good home for your data. Go to wikiba.se to learn more about the options.”

This is the fifth of six blog posts! Do you want to read the series from the beginning? Here’s the list of links to the previous posts:

- #LD42023 I: The Future of Wikidata + Libraries (A Workshop)

- #LD42023 II: Getting to Know Each Other, Librarians in the Wikidata World

- #LD42023 III: The Examples, Libraries Using Wikidata

- #LD42023 IV: Wikidata Tools everyone is talking about

- #LD42023 V: Main Challenges of Wikidata for Librarians (this post!)👈

- #LD42023 VI: Imagining a Wikidata Future for Librarians, Together
